Hi everyone,

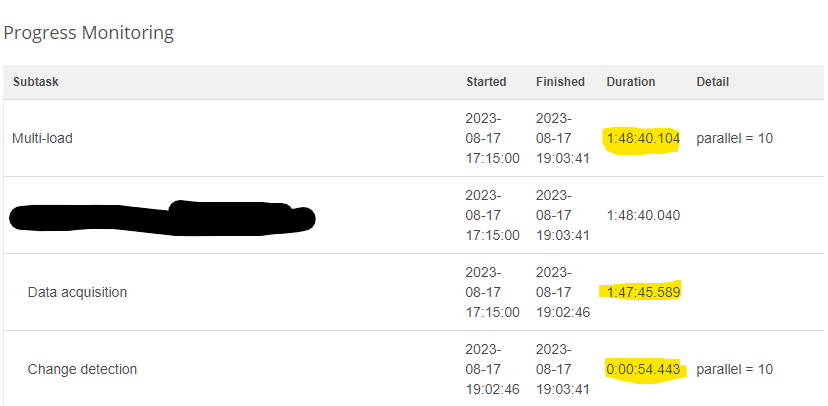

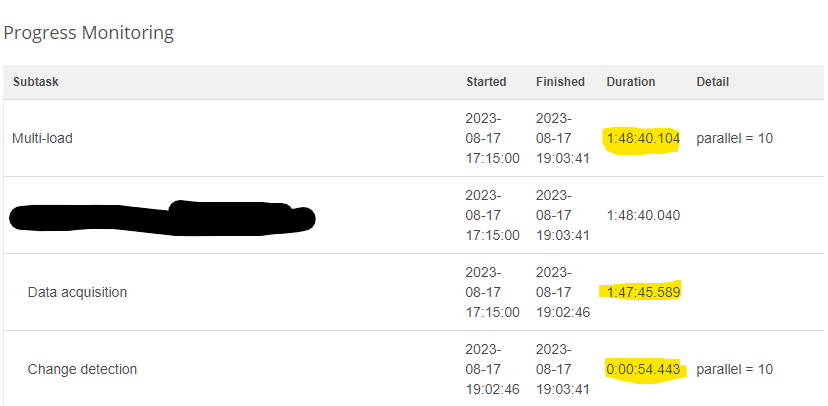

Why could data acquisition in a multi-load operation be so slow?

When I check the database, the query that retrieves the data takes less than 10 minutes. Do you have any guidance on this?

Hi,

The Data Acquisition step (Load Plan Execution) does more than execute the query on the source system side: it also transfers all of the resulting data to the MDM Server. If the dataset is large, that transfer alone can take a long time.
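To make the distinction concrete, the sketch below times executing a query separately from fetching every row. It assumes Python's standard-library `sqlite3` module as a stand-in for the real source database and JDBC connection; the `party` table and its size are hypothetical. SQLite runs in-process, so there is no network transfer here. Against a remote source, the fetch phase would additionally include moving every row over the network, which is why a figure from a database tool that reflects only execution or the first rows can understate the time the whole step takes.

```python
import sqlite3
import time


def time_query(conn: sqlite3.Connection, sql: str) -> dict:
    """Time statement execution and full row retrieval separately."""
    cur = conn.cursor()

    start = time.perf_counter()
    # For a SELECT, the driver typically returns once the first row is
    # ready; the remaining rows are produced while fetching.
    cur.execute(sql)
    executed = time.perf_counter()

    rows = cur.fetchall()  # pull every row into client memory
    fetched = time.perf_counter()

    return {
        "rows": len(rows),
        "execute_s": executed - start,
        "fetch_s": fetched - executed,
    }


if __name__ == "__main__":
    # Hypothetical in-memory table standing in for the source system.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE party (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO party (name) VALUES (?)",
        ((f"name-{i}",) for i in range(200_000)),
    )
    print(time_query(conn, "SELECT id, name FROM party"))
```

On large result sets, `fetch_s` usually dominates `execute_s`, which mirrors the gap between a fast-looking query and a slow acquisition step.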

It's hard to suggest anything without knowing more about the solution, but here are some basic checks you can perform to narrow down the problem. As I understand it, your load plan fetches data from a database, probably using the JDBC Reader step.
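One possible check, sketched here as an assumption rather than taken from the thread, is to count how many rows each query in the load plan returns and compare that with what you expect. The sketch again uses Python's `sqlite3` as a stand-in for the source database; the table names, queries, and expected maximums are hypothetical, and the missing join condition is only an illustrative way a query can return far more data than intended. Wrapping a query in `SELECT COUNT(*)` still runs it on the source, but only a single number comes back instead of the whole result set.

```python
import sqlite3


def count_rows(conn: sqlite3.Connection, sql: str) -> int:
    """Return how many rows `sql` produces, without fetching them."""
    # The database does the counting, so only one value reaches the client.
    (n,) = conn.execute(f"SELECT COUNT(*) FROM ({sql}) AS q").fetchone()
    return n


def find_oversized(conn, queries: dict[str, str], expected: dict[str, int]) -> list[str]:
    """Names of queries that return more rows than their expected maximum."""
    return [
        name
        for name, sql in queries.items()
        if count_rows(conn, sql) > expected[name]
    ]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE party (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE address (id INTEGER PRIMARY KEY, party_id INTEGER, city TEXT);
        INSERT INTO party (name) VALUES ('a'), ('b'), ('c');
        INSERT INTO address (party_id, city) VALUES (1, 'x'), (2, 'y'), (3, 'z');
    """)
    queries = {
        "party": "SELECT id, name FROM party",
        # Missing join condition: every party is paired with every address.
        "party_address": "SELECT p.id, a.city FROM party p, address a",
    }
    expected = {"party": 3, "party_address": 3}
    print(find_oversized(conn, queries, expected))  # ['party_address']
```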

Hey!

You're right! I found another query that was fetching more data than expected.

Thank you!