Hi everyone!

This week, we’ll continue covering the latest features and updates in 14.5 ⚡ Today on the menu, we have more ONE updates and features. Let’s dive in 🤿

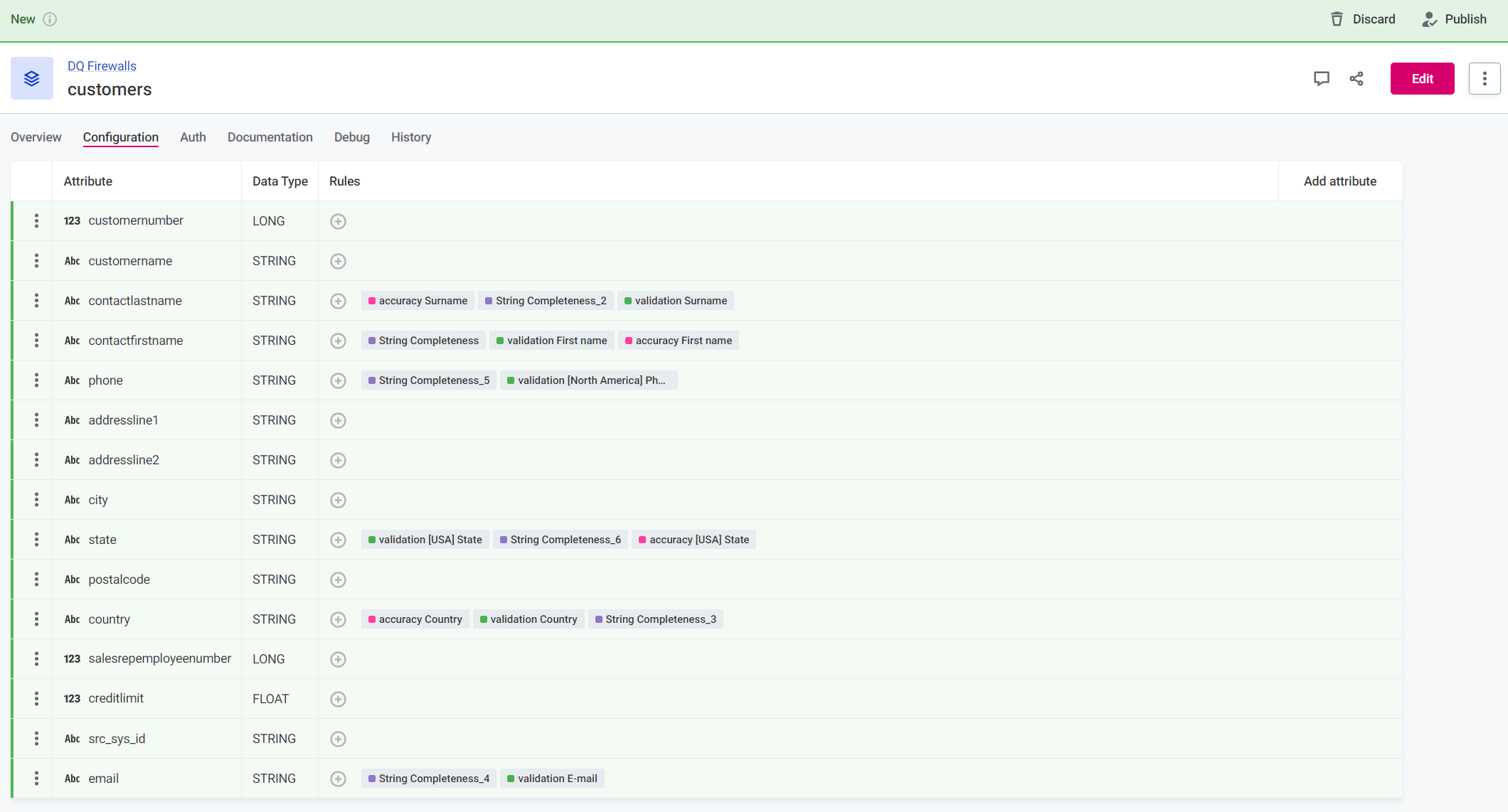

DQ Firewalls 🧯

DQ Firewalls allow you to apply data quality rules to your data using API calls, specifically, the evaluation APIs provided by the new DQF Service. Both GraphQL and REST options are available.

This lets you maintain one central rule library and apply Ataccama data quality evaluation rules to your data in real time, directly in the data stream.

For example, say you have an ETL pipeline in Python that processes data, and you want to make sure that it filters out invalid records. After you define the DQ rule in ONE, the pipeline can call the DQF endpoint for each record (or batch of records), and the records are split by their validity.
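To make the pipeline example a bit more concrete, here is a minimal Python sketch of the splitting step. Everything specific in it is an assumption for illustration: the URL, the `{"record": ...}` payload, and the `valid` response field are hypothetical and are not taken from the DQF Service API, so check your firewall configuration and the official docs for the real contract. Authentication is left out for brevity.

```python
import json
import urllib.request
from typing import Callable, Dict, Iterable, List, Tuple

Record = Dict[str, object]

# Hypothetical endpoint: the real URL comes from your DQ firewall configuration.
DQF_URL = "https://one.example.com/dqf/firewalls/my-firewall/evaluate"


def evaluate_via_rest(record: Record) -> bool:
    """Send one record to the (assumed) REST endpoint and return its validity."""
    request = urllib.request.Request(
        DQF_URL,
        data=json.dumps({"record": record}).encode("utf-8"),  # assumed payload shape
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        body = json.load(response)
    return bool(body["valid"])  # assumed response field


def split_by_validity(
    records: Iterable[Record],
    evaluate: Callable[[Record], bool],
) -> Tuple[List[Record], List[Record]]:
    """Split records into (valid, invalid) using the given evaluator."""
    valid: List[Record] = []
    invalid: List[Record] = []
    for record in records:
        (valid if evaluate(record) else invalid).append(record)
    return valid, invalid
```

In a real pipeline you would pass `evaluate_via_rest` (adapted to the actual API) as the evaluator. Keeping the evaluator as a parameter also means you can test the pipeline with a local stub, for example `split_by_validity(rows, lambda r: bool(r.get("email")))`, without calling the service.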

DQF Service

With 14.5, we have a new DQF service that handles rule debug in rules and in monitoring projects. It also enables live data quality validation in ONE Data and powers the new DQ firewall feature.

Two types of API are provided by the service:

- Management APIs: These APIs configure the service itself. They are primarily called by the Metadata Management Module (MMM) to create a new DQ firewall, get DQ firewall statistics (such as average execution time, number of calls, pass and fail ratio), and configure global default authentication and ad hoc rule evaluation.

- Evaluation APIs: APIs that accept actual data and return results of DQ evaluation, that is, the calls used for the DQ firewall feature. They are always bound to a specific firewall configuration, which also defines authentication.

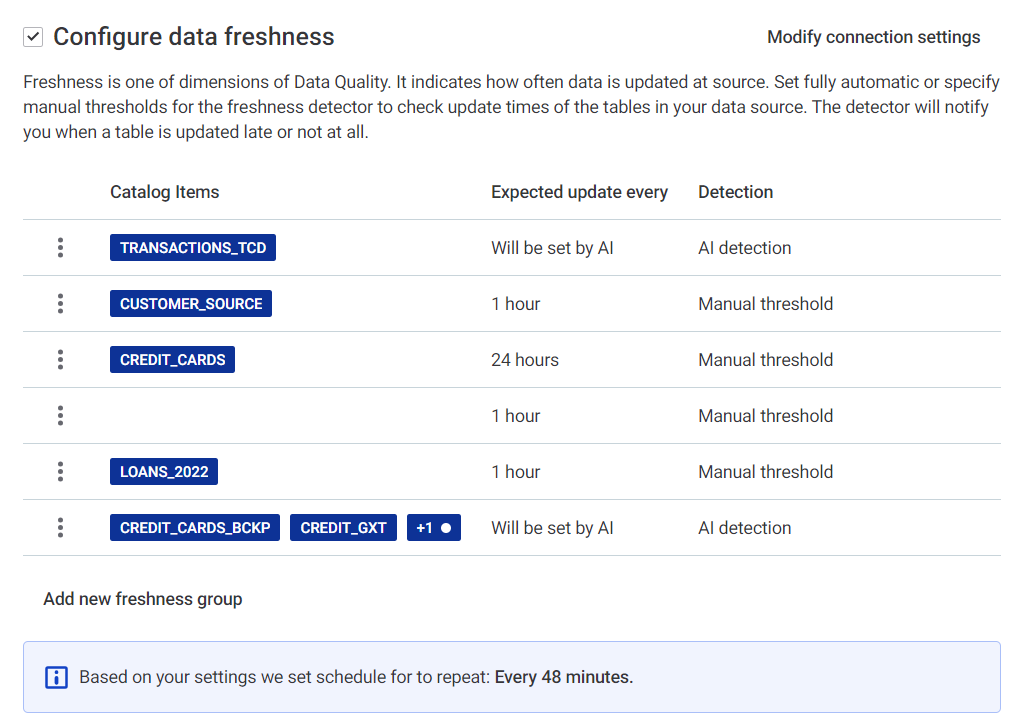

Freshness Checks Added to Data Observability ✅

Freshness is an essential factor for good data quality and it indicates how often data is updated at the source. You can now set thresholds for freshness either manually or using AI, and the system checks your data source for updates, alerting you when a table is updated late or not at all.

On the dashboards, you can see information such as the number of missing updates, the time between the last update and the last check, the expected time between updates, and the detection type (AI or manual).
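If you are wondering how a manual threshold relates to the "missing updates" number, here is a small Python sketch of one way to think about it. This is an illustration only, not how ONE computes freshness internally: the function name and the rule "count whole expected intervals since the last update" are assumptions.

```python
from datetime import datetime, timedelta


def missing_updates(
    last_update: datetime,
    checked_at: datetime,
    expected_interval: timedelta,
) -> int:
    """Count whole expected intervals that passed since the last update."""
    if expected_interval <= timedelta(0):
        raise ValueError("expected_interval must be positive")
    elapsed = checked_at - last_update
    # timedelta // timedelta yields an int; clamp negative values to 0.
    return max(0, elapsed // expected_interval)


# A table expected to refresh daily, last updated 54 hours before the check.
late_by = missing_updates(
    last_update=datetime(2024, 1, 1, 0, 0),
    checked_at=datetime(2024, 1, 3, 6, 0),
    expected_interval=timedelta(hours=24),
)
print(late_by)  # 2
```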

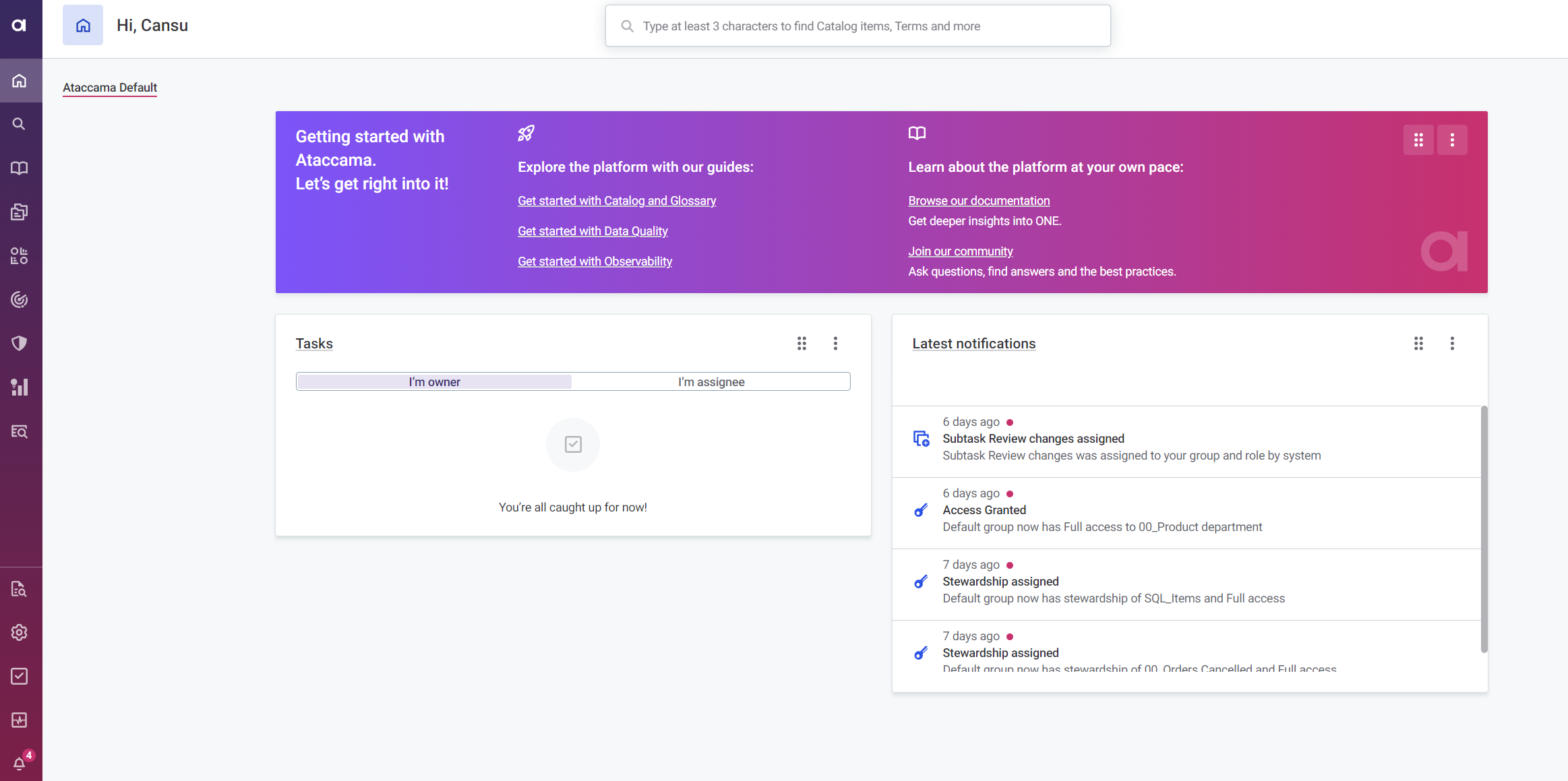

Home Page 🏡

We now support two additional widgets:

- Getting started with Ataccama: Widget containing links to onboarding sections within our documentation and community webpages.

- Tasks: Manage and track your tasks, assign tasks to others, set due dates, and monitor progress.

Additionally, access to landing pages is now managed through a new View page access level. Viewers with roles that include this access level can view landing pages and their content but cannot make any changes.

New Default Templates for Notifications 🔔

Slack, MS Teams, and email notifications now use new default templates. Custom templates from older versions will still be usable after the upgrade, but if you want to make further edits to custom templates, you must convert them to the new template syntax.

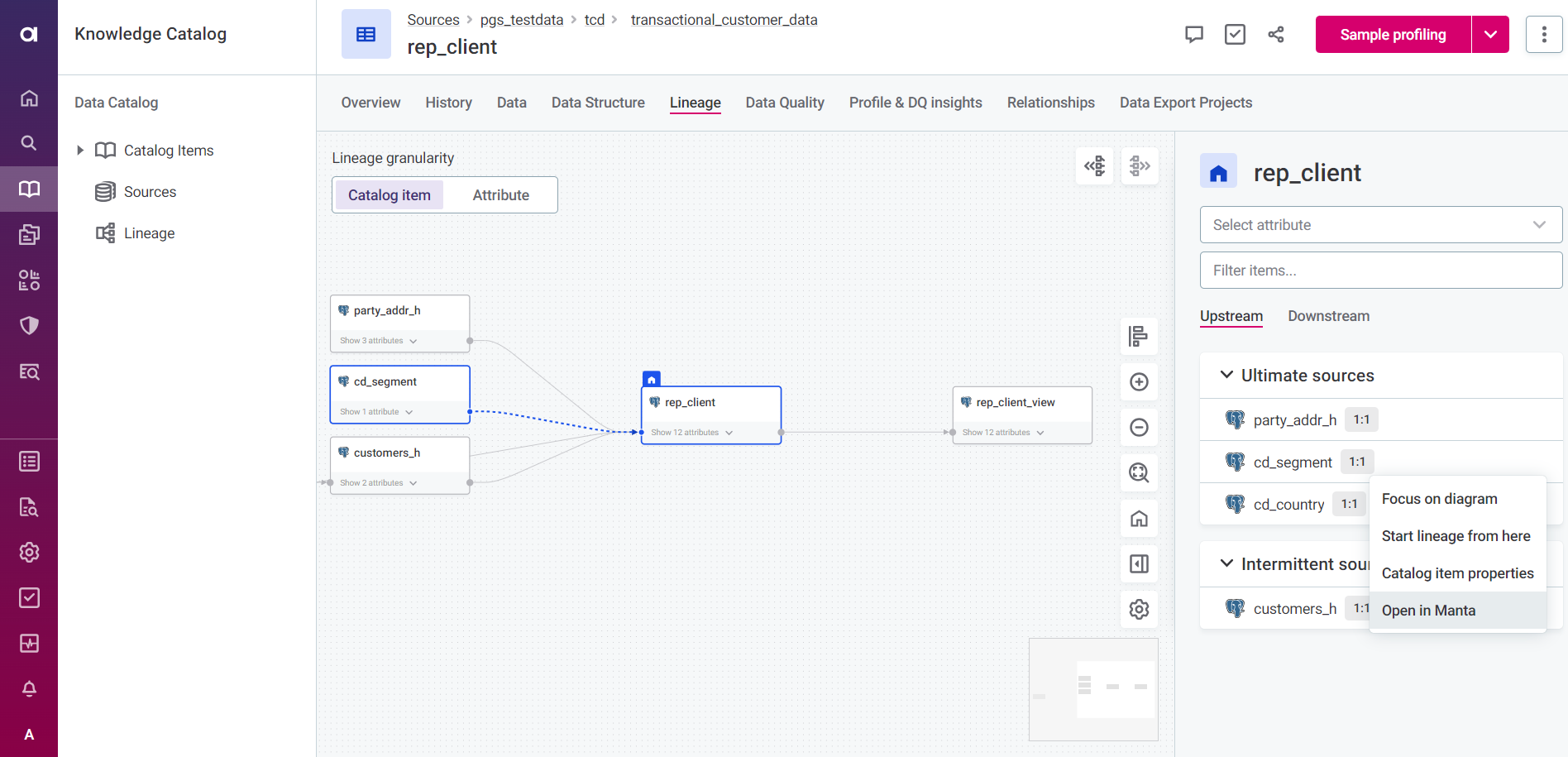

Improvements to MANTA Lineage Integration 🖇️

Thanks to all the community members who contributed their time and feedback, we have updated how you import and explore lineage metadata from MANTA in ONE.

Previously, browsing lineage in ONE required a direct connection to MANTA. With 14.5, you first generate a compatible snapshot of lineage metadata in MANTA and then import and map it to relevant data sources in ONE. We have also expanded the support for lineage to additional connection types.

We have redesigned the catalog item Lineage tab to make the navigation more intuitive and introduced new exploration options such as searching (or filtering) source or target items, opening the selected item in MANTA viewer, or viewing transformation details for attributes that are subject to complex transformation.

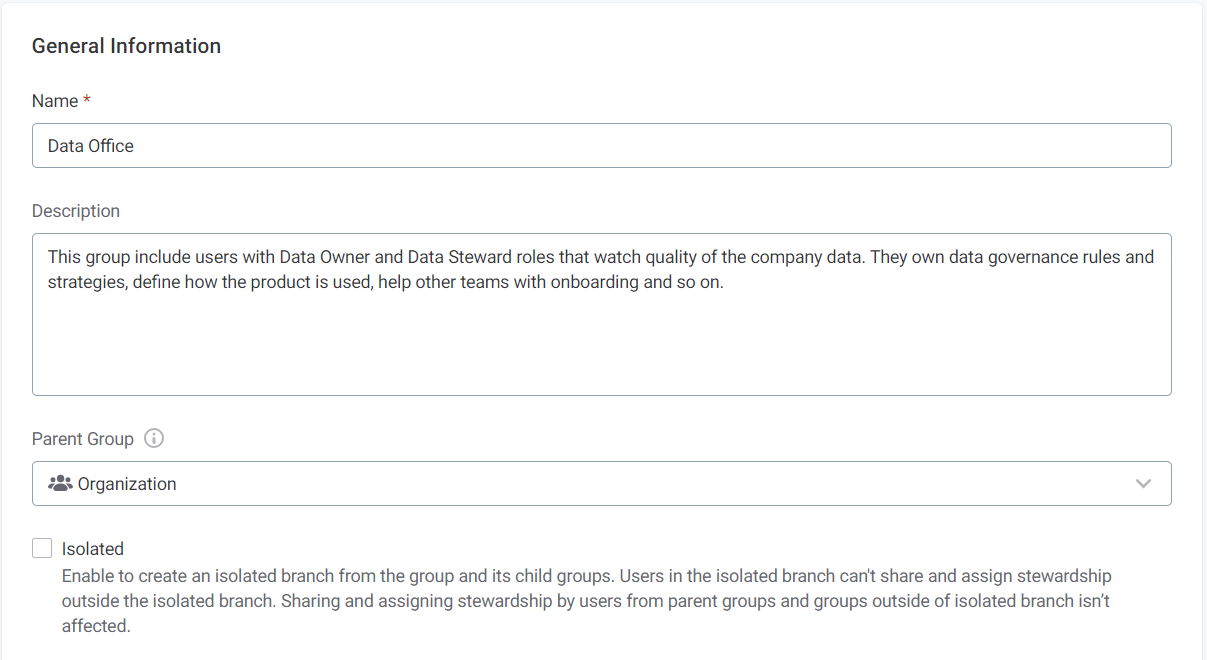

Group Isolation 🌿

You can now create isolated groups or branches, which consist of an isolated group and children. When a group is isolated, members of the group or branch are restricted from sharing assets and assigning stewardship to assets outside of their group or branch.

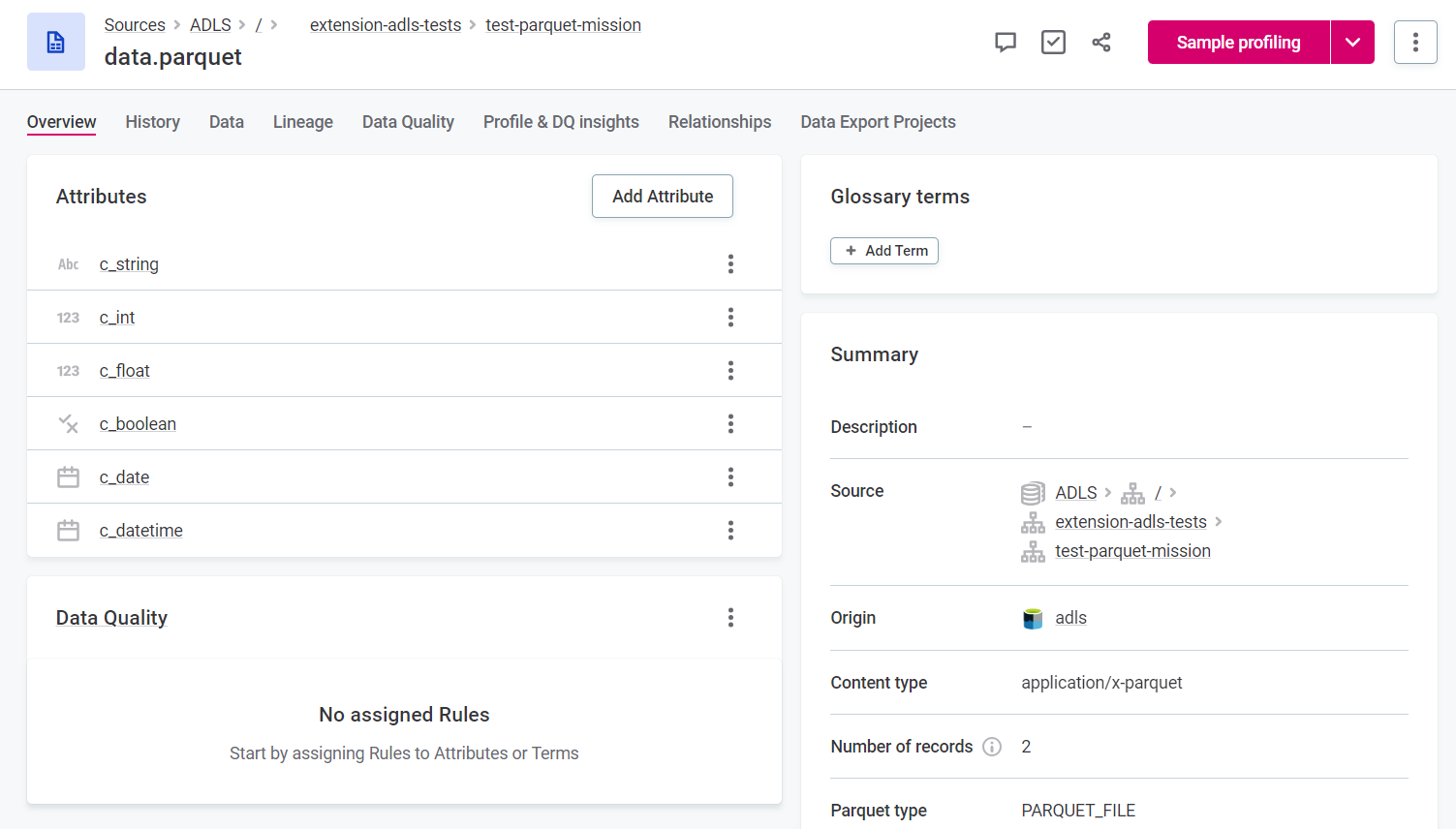

Support for Apache Parquet 👍

We have added support for the following Apache Parquet assets: files, tables, and partitioned tables. When assets other than Parquet files are imported, ONE analyzes the asset and then creates a catalog item with the attributes based on the asset.

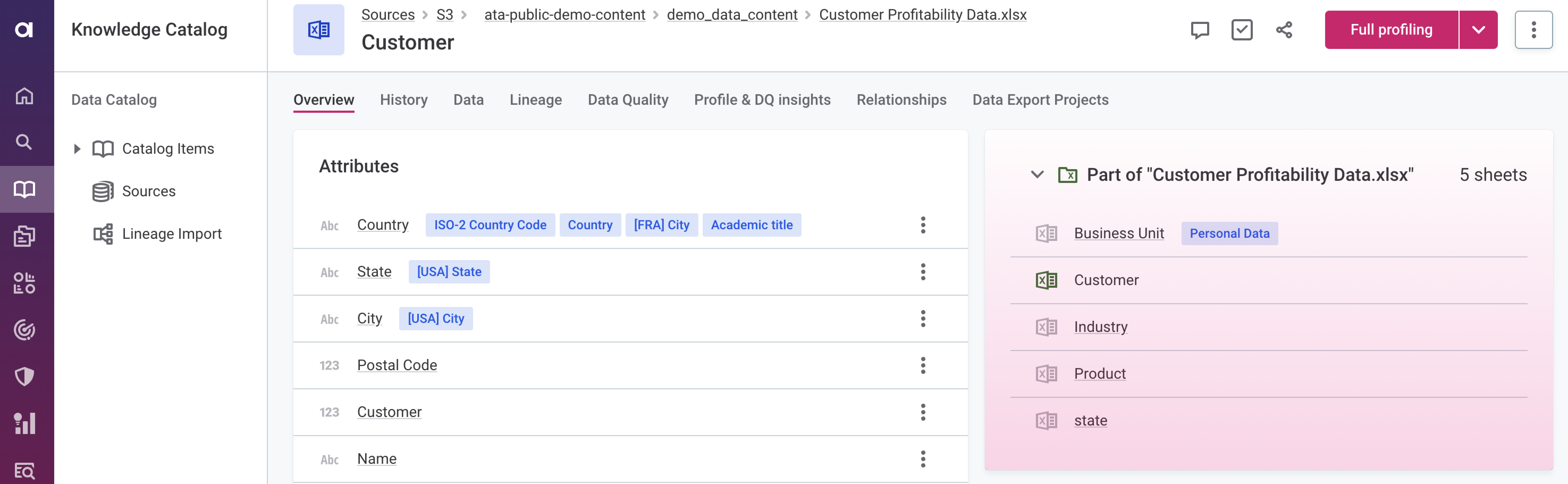

Support for Microsoft Excel 🔢

You can now work with Microsoft Excel files in ONE! When you import a Microsoft Excel file, each sheet is loaded as a separate catalog item, while the file itself corresponds to a location within your data source. This way, you can see all catalog items imported from the same Microsoft Excel file.

Check the catalog item Relationships or Overview tab to see a list of other catalog items from the single file import. You can profile and evaluate catalog items imported from Microsoft Excel files as you would any other catalog item. Previously, Microsoft Excel files could be imported to ONE, but no further processing was possible.

Support for Italian Language

You can now change the language of the application to Italian.

Audit Log Retention Changes 🪵

By default, audit logs are now stored in an internal audit database for 90 days in newly installed environments and upgraded environments without existing audit logs. In environments with existing audit logs, the default retention is extended to one year.

You can now change the default retention and cleanup settings for the audit database directly from Audit. You can also set up a schedule for exporting, retention, and cleanup of audit logs in a designated ONE Object Storage (MinIO) bucket. You can also manage access to the Audit module with newly added identity provider (Keycloak) roles.

Connect to Multiple Databricks Clusters from a Single DPE

You can now connect to multiple Databricks clusters from a single DPE using the new plugin.metastoredatasource.ataccama.one.cluster.<clusterId> property patterns, which you specify in the dpe/etc/application.properties file. To use multiple Databricks clusters, the properties related to each cluster configuration must use the cluster ID associated with that cluster.
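To show how the cluster ID ties each cluster's properties together, here is a small Python sketch that groups property keys by the `<clusterId>` segment. Only the prefix comes from the release notes. The suffixes used in the example (`url`, `name`) and the cluster IDs are hypothetical placeholders, so look up the actual property names in the documentation.

```python
from typing import Dict

PREFIX = "plugin.metastoredatasource.ataccama.one.cluster."


def group_by_cluster(properties: Dict[str, str]) -> Dict[str, Dict[str, str]]:
    """Group cluster-scoped properties by the cluster ID that follows PREFIX."""
    clusters: Dict[str, Dict[str, str]] = {}
    for key, value in properties.items():
        if not key.startswith(PREFIX):
            continue  # not a cluster-scoped property
        cluster_id, _, setting = key[len(PREFIX):].partition(".")
        if cluster_id and setting:
            clusters.setdefault(cluster_id, {})[setting] = value
    return clusters


# Hypothetical suffixes ("url", "name") and cluster IDs, for illustration only.
example = {
    PREFIX + "clusterA.url": "https://a.example.com",
    PREFIX + "clusterA.name": "Cluster A",
    PREFIX + "clusterB.url": "https://b.example.com",
    "some.other.property": "ignored",
}
grouped = group_by_cluster(example)
```

Here `grouped` has two entries, `clusterA` and `clusterB`, each holding only the settings written under its own ID.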

Cancel All Jobs

A Cancel all jobs button has been added to the DPM Admin Console. When selected, all jobs that are not in the state KILLED, FAILURE, or SUCCESS are cancelled and can't be resumed.
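In other words, the button targets every job that has not already reached one of the three final states. A tiny Python sketch of that selection rule follows. The job IDs and the non-final state names (`RUNNING`, `QUEUED`) are made up for the example; only KILLED, FAILURE, and SUCCESS come from the description above.

```python
from typing import Dict, List

# States named in the release notes as excluded from cancellation.
FINAL_STATES = {"KILLED", "FAILURE", "SUCCESS"}


def jobs_to_cancel(jobs: Dict[str, str]) -> List[str]:
    """Return the IDs of jobs whose state is not one of the final states."""
    return [job_id for job_id, state in jobs.items() if state not in FINAL_STATES]


# Hypothetical job IDs and non-final state names.
jobs = {"job-1": "RUNNING", "job-2": "SUCCESS", "job-3": "QUEUED", "job-4": "KILLED"}
print(jobs_to_cancel(jobs))  # ['job-1', 'job-3']
```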

DPE Label in Run DPM Job Workflow Task

When creating a Run DPM Job workflow task, you have the option to set a DPE label that is then matched against labels assigned to available DPEs.
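As a rough mental model of the label matching, here is a Python sketch that picks the DPEs eligible for a job. It is an assumption-based illustration rather than DPM's actual scheduling logic: the exact-match rule, the "no label means any DPE" behavior, and the DPE names and labels are all hypothetical.

```python
from typing import Dict, List, Optional, Set


def eligible_dpes(
    dpe_labels: Dict[str, Set[str]],
    required_label: Optional[str] = None,
) -> List[str]:
    """Return the names of DPEs whose labels include the required label.

    Assumption: when no label is set on the task, every DPE is eligible.
    """
    if required_label is None:
        return sorted(dpe_labels)
    return sorted(
        name for name, labels in dpe_labels.items() if required_label in labels
    )


# Hypothetical DPE names and labels.
dpes = {"dpe-1": {"spark", "eu"}, "dpe-2": {"eu"}, "dpe-3": set()}
print(eligible_dpes(dpes, "eu"))     # ['dpe-1', 'dpe-2']
print(eligible_dpes(dpes, "spark"))  # ['dpe-1']
```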

What are your thoughts on the latest updates and features? Anything you are excited to try? Let us know in the comments below 👇