Hi,

I would like to know which of these two approaches is the better idea:

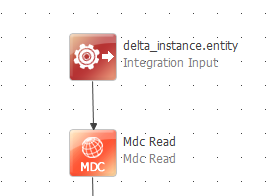

- use the MDC Reader step in delta export operations where we need to complement the data with entities from another layer

or

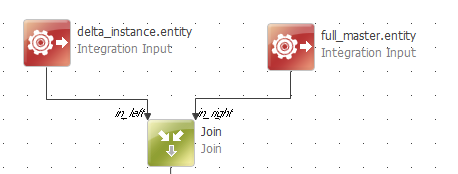

- add another Data Source in full mode for that other entity, then use a Join step to get the required information.

Both are feasible, but I am not sure which is better in terms of batch processing, performance, etc.
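
To make the comparison concrete, here is a rough sketch of how I picture the two options, written in plain Python rather than with the actual steps. It assumes the reader step behaves like one lookup per delta record and the Join step like a keyed join against the full load; I have not confirmed either. All names (`fetch_one`, `enrich_by_lookup`, `enrich_by_join`, the sample fields) are hypothetical.

```python
# Hypothetical sample data: delta records plus the full set of the other entity.
delta_records = [
    {"id": 1, "ref_id": "A"},
    {"id": 2, "ref_id": "B"},
]
other_entity_full = [
    {"ref_id": "A", "name": "Alpha"},
    {"ref_id": "B", "name": "Beta"},
    {"ref_id": "C", "name": "Gamma"},  # not referenced by the delta, still loaded
]


def fetch_one(ref_id):
    """Stand-in for a single lookup against the other layer (option 1)."""
    for row in other_entity_full:
        if row["ref_id"] == ref_id:
            return row
    return None


def enrich_by_lookup(delta):
    """Option 1: one lookup per delta record; cost grows with the delta size."""
    result = []
    for rec in delta:
        extra = fetch_one(rec["ref_id"]) or {}
        result.append({**rec, **extra})
    return result


def enrich_by_join(delta, full_rows):
    """Option 2: load the other entity in full once, then join on the key."""
    index = {row["ref_id"]: row for row in full_rows}  # cost grows with the full set
    return [{**rec, **index.get(rec["ref_id"], {})} for rec in delta]
```

In this sketch both functions return the same enriched records. The difference is where the work goes: option 1 scales with the number of delta records, option 2 with the size of the full entity.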