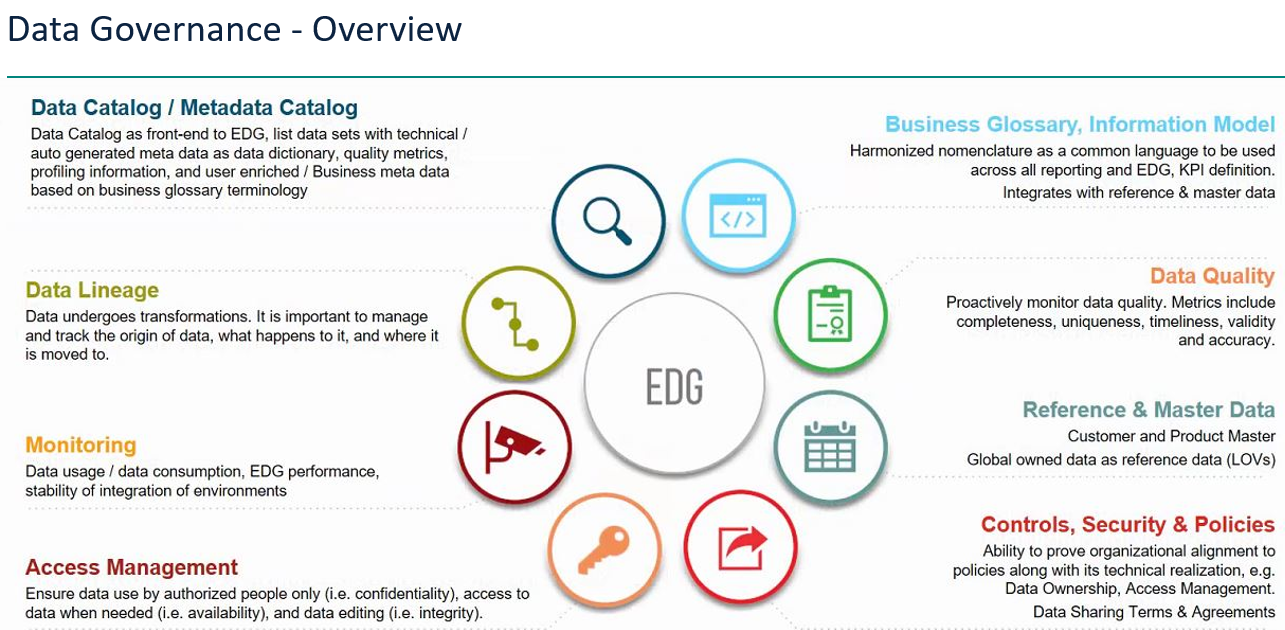

Enterprise Data Governance is the management of data across its pipeline, from production to consumption (and retirement). How can we enable our “data fellows” to leverage data to save money, save time and reduce risk?

What are the key elements of success? What are your thoughts?