Hi Ataccama community,

I’m hoping someone with a better grip on the Ataccama GraphQL model can shed some light on this.

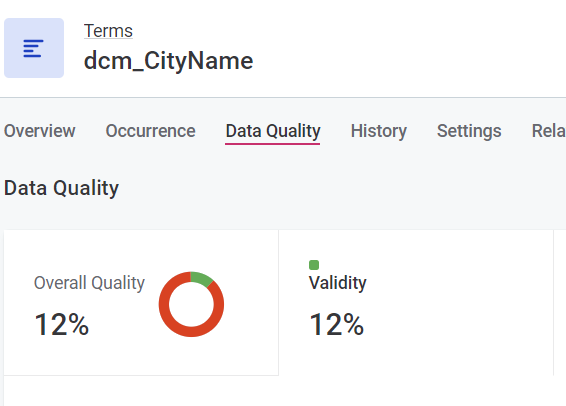

For internal reporting, we want to retrieve data quality details via the Ataccama GraphQL API. One of the requests is to produce an overview of the DQ score across the (rules’) DQ dimensions. This is essentially what the web UI shows on the ‘Data Quality’ tab of a term: the score broken down by dimension, based on how the rules are defined.

What I’m trying to do is retrieve the passed/failed rows at this level, i.e. for each term and each dimension, so that I can summarise them into a score per dimension.
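Once passed/failed counts are available per term and dimension, the summary itself is simple arithmetic. Below is a minimal Python sketch, assuming the score is passed / (passed + failed) rows summed over all rules in a dimension. `RuleResult` and `score_per_dimension` are hypothetical names, and the sample values are invented for illustration. The web UI may aggregate differently (for example, counting a record once per dimension rather than once per rule), so treat this as an approximation, not Ataccama's formula.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class RuleResult(NamedTuple):
    """Passed/failed row counts for one rule on one term (hypothetical shape)."""
    term: str
    dimension: str
    passed: int
    failed: int


def score_per_dimension(results: Iterable[RuleResult]) -> dict[tuple[str, str], float]:
    """Return {(term, dimension): percentage of passed rows}.

    Assumes score = passed / (passed + failed), with rows summed over every
    rule in the same term/dimension. Pairs with no rows at all are skipped.
    """
    totals: dict[tuple[str, str], list[int]] = defaultdict(lambda: [0, 0])
    for r in results:
        key = (r.term, r.dimension)
        totals[key][0] += r.passed
        totals[key][1] += r.failed
    return {
        key: 100.0 * passed / (passed + failed)
        for key, (passed, failed) in totals.items()
        if passed + failed > 0
    }


if __name__ == "__main__":
    # Invented sample data: two accuracy rules and one completeness rule on one term.
    sample = [
        RuleResult("Customer Email", "Accuracy", 90, 10),
        RuleResult("Customer Email", "Completeness", 95, 5),
        RuleResult("Customer Email", "Accuracy", 40, 60),
    ]
    for (term, dim), score in sorted(score_per_dimension(sample).items()):
        print(f"{term} / {dim}: {score:.1f}%")
```

With the sample above, the two accuracy rules pool to 130 passed out of 200 rows (65.0%), while completeness stays at 95.0%.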

However, I am unable to get this out of the API.

I’ve approached this from both the ‘terms’ and the ‘rules’ side. Either way, I always end up with the ‘Overall Quality’ score for each term, not the per-dimension details.

The ‘rules’ query looks promising, but ultimately it only returns the passed/failed records for the overall quality score, not for the specific rule that applies (for example, the accuracy rule).

For reference, this is the attempt starting from the (list of) rules:

```graphql
query listRules {
  rules(versionSelector: {draftVersion: true} filter: "implementation.$type =='ruleDqImplementation'") {
    edges {
      node {
        gid
        publishedVersion {
          name
          ruleDefinitionSource
          #description
          implementation {
            gid
            #type
            publishedVersion {
              aggregationRule
              component
              dqDimension {
                publishedVersion {
                  name
                }
              }
            }
          }
          termInstances {
            edges {
              node {
                gid
                publishedVersion {
                  displayName
                  target {
                    gid
                    publishedVersion {
                      name
                      dqEvalTermAggr {
                        storedVersion {
                          ruleCount
                          recordCount
                          invalidCount
                          validCount
                        }
                      }
                    }
                  }
                }
              }
            }
          }
        }
      }
    }
  }
}
```

And this is the attempt starting from the list of terms, trying to find out which rules apply and ideally retrieving the scores from there:

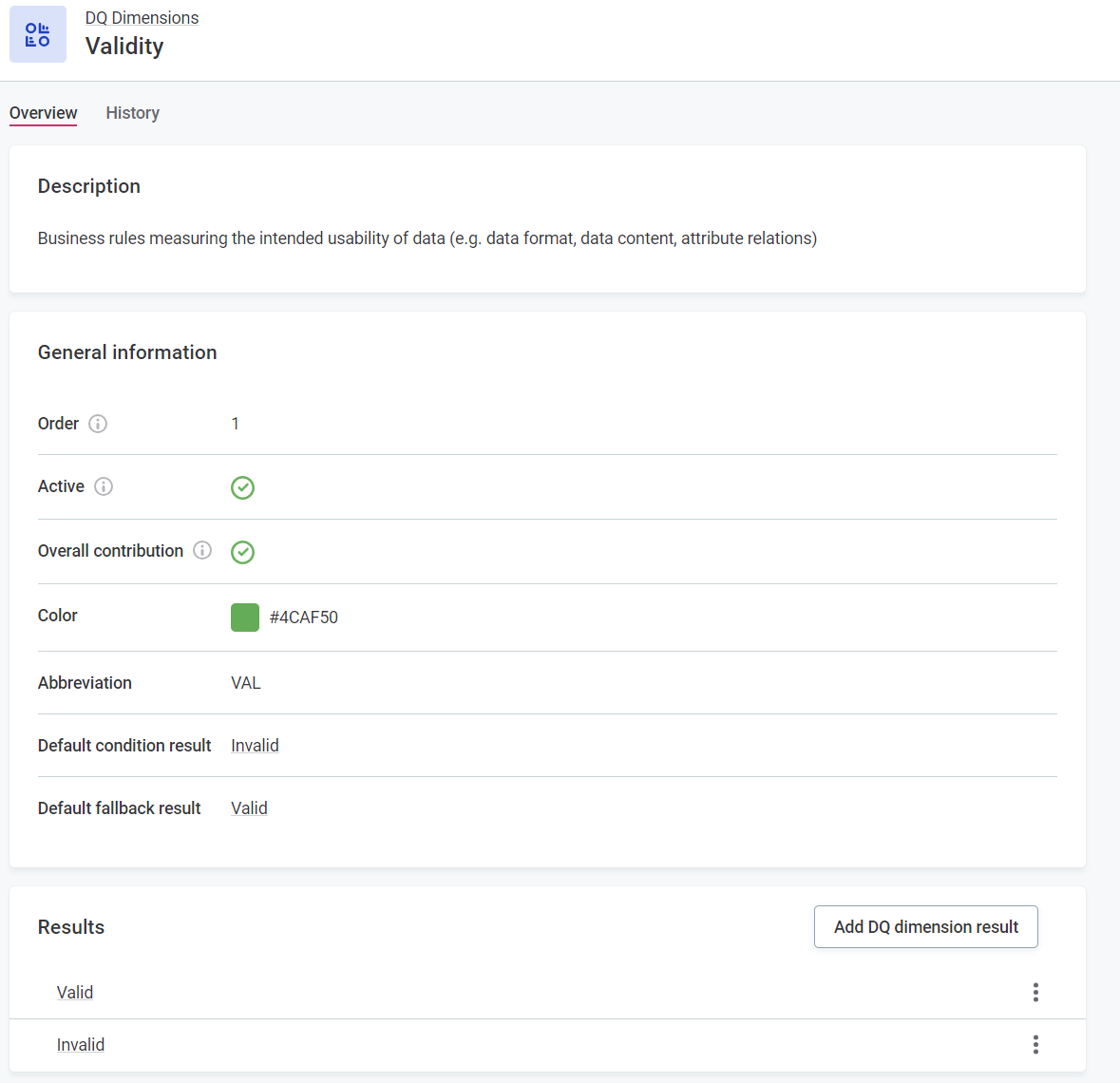

```graphql
query listGlossaryTerms {
  terms(versionSelector: { draftVersion: false }) {
    edges { # List of terms
      node {
        gid
        publishedVersion { # Individual term
          name
          dqEvalTermAggr {
            storedVersion {
              ruleCount
              recordCount
              invalidCount
              validCount
            }
          }
          validationRules {
            publishedVersion {
              enabled
              ruleInstances { # listwrapper (list of rules)
                edges {
                  node { # nodewrapper
                    gid
                    nodePath
                    storedVersion { # individual rule
                      displayName
                    }
                  }
                }
              }
            }
          }
          stewardship {
            gid
            nodePath
            type
            draftVersion {
              name
            }
          }
        }
      }
    }
  }
}
```

Does anyone know which route to take to get the scores per dimension? Judging by the page setup, there seems to be a `dqEvalTermOverview` object that contains this, but I don’t know how to access it via GraphQL.