Issue: We receive files to be tested against our DQ rules (Monitoring Projects) every 2 weeks. Each set of files is referenced by its creation date (e.g. 2024-10-01, 2024-10-14, ...). When we run Monitoring Projects against these items, we only get the Execution Date, which can differ from the file date. When we extract data from ONE for reporting, we only have the Extraction Date and don’t necessarily know which file date the plan results are for (we sometimes have to rerun old files for new rules, etc.).

Question: Is there a way to add a custom attribute / parameter for when we run the Monitoring Project (at the processing id level) so we can report our DQ results by File date?

The 2 options we could think of:

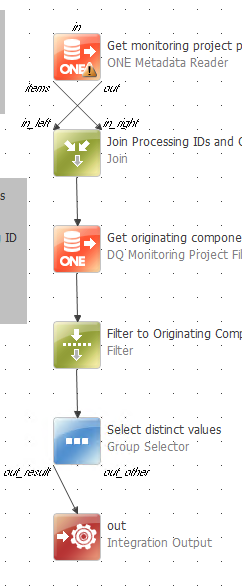

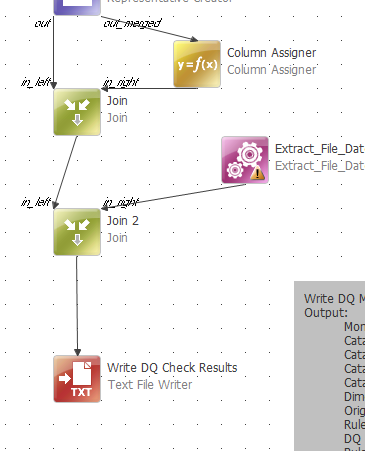

- In the extraction component, connect to the catalog items and get the file date, which is in the table itself. We tried this, but because we need to extract history, we would need the file date, catalog item, and Monitoring Project processing id to join with the DQ Aggregation Results tasks in the component. That didn’t seem possible, and it would also be expensive.

- Add a custom attribute/parameter that we are prompted to fill in when running the Monitoring Project (and could set through an API call for automation) and that can be brought into the Monitoring Project results without having to connect to the actual catalog item data.
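To illustrate the reporting we are after, here is a minimal Python sketch of joining extracted DQ results to a file date by processing id. It runs outside ONE and does not use any ONE API. The field names (`processing_id`, `passed`, `failed`), the sample ids, and the `run_log` lookup are all hypothetical and stand in for whatever the extraction and a custom attribute would actually provide.

```python
from collections import defaultdict

# Hypothetical lookup recorded at run time: processing id -> file date.
# In practice this is the value a custom attribute/parameter would carry.
run_log = {
    "proc-001": "2024-10-01",
    "proc-002": "2024-10-14",
    "proc-003": "2024-10-01",  # rerun of an older file for new rules
}

# Hypothetical extracted DQ results, one row per processing id and rule.
dq_results = [
    {"processing_id": "proc-001", "rule": "not_null", "passed": 95, "failed": 5},
    {"processing_id": "proc-002", "rule": "not_null", "passed": 90, "failed": 10},
    {"processing_id": "proc-003", "rule": "valid_code", "passed": 80, "failed": 20},
]


def results_by_file_date(results, log):
    """Sum passed/failed counts per file date instead of per execution date."""
    totals = defaultdict(lambda: {"passed": 0, "failed": 0})
    for row in results:
        # Rows whose processing id has no recorded file date go to "unknown".
        file_date = log.get(row["processing_id"], "unknown")
        totals[file_date]["passed"] += row["passed"]
        totals[file_date]["failed"] += row["failed"]
    return dict(totals)


report = results_by_file_date(dq_results, run_log)
for file_date in sorted(report):
    print(file_date, report[file_date])
```

The point is only that a file date keyed by processing id is enough for the report; the catalog item data itself would not need to be read again.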

Thanks in advance for any help!

Greg