Does anyone know how to recreate a freshness check as a data quality (DQ) rule, so that we can assign the rule to a monitoring project? We are working in Ataccama One Web and would prefer not to use Ataccama One Desktop for now.

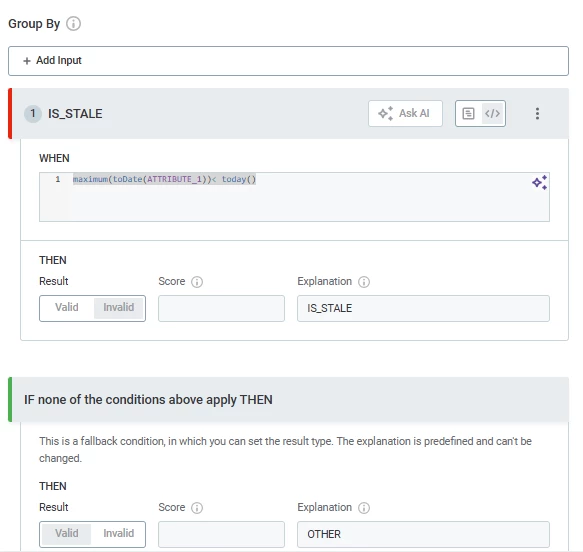

I have an attribute that stores the date/time at which each record was loaded into our table. If at least one record was loaded on the current date, we want every record in the table to pass, so the monitoring project reports 100% data quality. If no record was loaded on the current date, every record should fail, so the monitoring project reports 0% data quality.
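
To make the intended logic concrete, here is a rough Python sketch of the behavior we are after. This is not Ataccama expression syntax and it does not use any Ataccama API; the names `record_results` and `freshness_score` are made up for illustration, and it assumes the load attribute can be read as a list of `datetime` values.

```python
from datetime import date, datetime


def record_results(load_timestamps, today=None):
    """Return one pass/fail flag per record.

    Every record passes if at least one record was loaded on `today`;
    otherwise every record fails. (Hypothetical helper, for illustration.)
    """
    today = today or date.today()
    # A single table-level fact decides the outcome for all records.
    has_fresh_record = any(ts.date() == today for ts in load_timestamps)
    return [has_fresh_record] * len(load_timestamps)


def freshness_score(load_timestamps, today=None):
    """Return the resulting data quality percentage: 100.0 or 0.0."""
    results = record_results(load_timestamps, today)
    if not results:
        # Assumption: an empty table counts as not fresh.
        return 0.0
    return 100.0 * sum(results) / len(results)


if __name__ == "__main__":
    loads = [datetime(2024, 1, 1, 8, 0), datetime(2024, 1, 2, 9, 30)]
    print(freshness_score(loads, today=date(2024, 1, 2)))
    print(freshness_score(loads, today=date(2024, 1, 3)))
```

The key point is that the pass/fail result for each record depends on an aggregate over the whole table (the most recent load date), not on that record's own value.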

What is the best approach to set up a rule like this? Thank you in advance!

We want to use the monitoring project's automation capabilities rather than manually running the freshness check from the Knowledge Catalog every day.