
Hi community!

In this post, we'll walk through how to create server connections in ONE Desktop for use in various tasks and workflow steps. Read on to find out more 👀

How to register a new server?

To set up a new server connection, follow these steps:

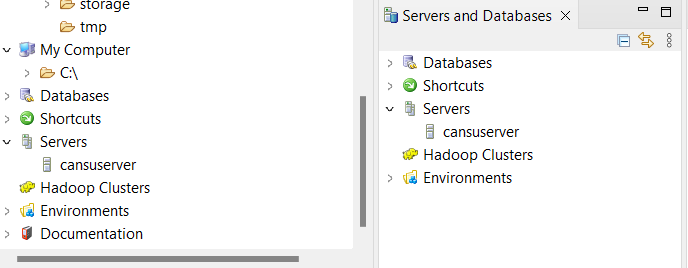

- Right-click Servers in the File Explorer and select New Server.

- Choose the desired Environment. Learn more about Environments.

- Provide a unique server Name and select the appropriate Implementation.

- Configure the server connection according to your implementation, and click Finish.

Once registered, the new server connection will appear in the File Explorer tree.

Note that, during development, right-clicking the server connection and selecting Connect is not a valid connectivity test, and it is not required for steps to communicate with the server.

Implementation Types

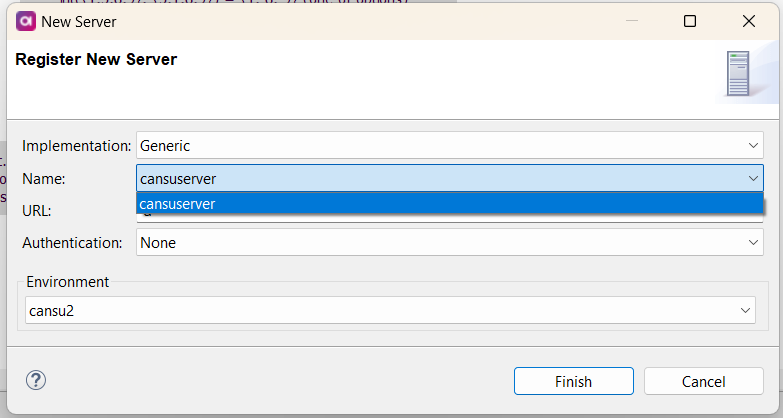

Generic

Use this implementation to connect to:

- Servers running RDM or DQIT web applications. Learn more about Importing and Exporting Issues and RDM Synchronization.

- Generic URL resources, such as specifying a remote host in the SFTP/SCP Download/Upload File workflow tasks.

Property Details:

- URL: Specifies the server URL in the format <protocol>://<host>:<port> or <host>:<port>. The URL of the Ataccama server should be specified without a protocol.

- Authentication: Choose according to the server's security settings (None, Basic, OpenID Connect).
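
To make the two accepted URL shapes concrete, here is a minimal Python sketch that checks a value against them before you paste it into the URL property. The function name parse_server_url and the regular expression are illustrative assumptions, not part of ONE Desktop, and the tool's own validation may be stricter or more lenient.

```python
import re
from typing import NamedTuple, Optional


class ServerUrl(NamedTuple):
    protocol: Optional[str]  # None when the value has the <host>:<port> shape
    host: str
    port: int


# Hypothetical pattern for the two shapes described above:
#   <protocol>://<host>:<port>   or   <host>:<port>
_URL_RE = re.compile(
    r"^(?:(?P<protocol>[A-Za-z][A-Za-z0-9+.-]*)://)?"
    r"(?P<host>[^\s:/]+):(?P<port>\d{1,5})$"
)


def parse_server_url(value: str) -> ServerUrl:
    """Split a server URL into protocol, host, and port, or raise ValueError."""
    match = _URL_RE.match(value.strip())
    if match is None:
        raise ValueError(f"not in <protocol>://<host>:<port> or <host>:<port> form: {value!r}")
    port = int(match.group("port"))
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return ServerUrl(match.group("protocol"), match.group("host"), port)


# Placeholder hosts and ports, for illustration only.
print(parse_server_url("sftp://files.example.com:22"))
print(parse_server_url("localhost:8888"))
```

A result whose protocol is None corresponds to the protocol-less form, which is the one to use for an Ataccama server.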

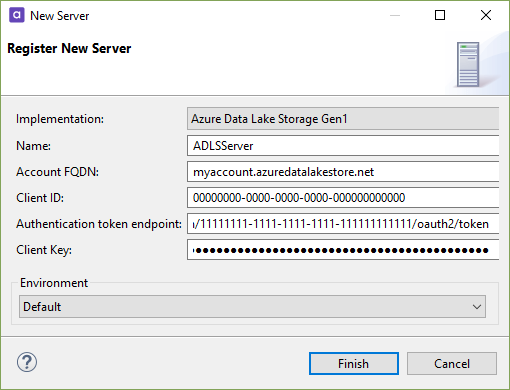

Azure Data Lake Storage Gen1

Connect to the Azure Data Lake Storage Gen1 server to upload or download files stored there. You can use this server connection in workflows and plans as an input or output with Reader/Writer steps.

Property Details:

- Account FQDN: The fully qualified domain name of the account.

- Client ID: Application (client) ID unique to the client application.

- Authentication token endpoint: URL for obtaining the access token.
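
As a rough illustration of what these three values typically look like, the sketch below assembles them from an account name, a tenant ID, and a client ID. It assumes the standard Azure naming: an account FQDN ending in azuredatalakestore.net and the Microsoft Entra ID (Azure AD) v1 OAuth 2.0 token endpoint. The helper and its parameter names are hypothetical, and your tenant or ONE Desktop version may expect a different endpoint, so treat the output as a starting point to verify in the Azure portal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdlsGen1Settings:
    account_fqdn: str
    client_id: str
    token_endpoint: str


def build_adls_gen1_settings(account_name: str, tenant_id: str, client_id: str) -> AdlsGen1Settings:
    """Assemble the three property values from their usual building blocks.

    Assumption: the default public-cloud host names are used.
    """
    return AdlsGen1Settings(
        account_fqdn=f"{account_name}.azuredatalakestore.net",
        client_id=client_id,
        token_endpoint=f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    )


# Placeholder identifiers, not real accounts.
settings = build_adls_gen1_settings(
    account_name="mydatalake",
    tenant_id="00000000-0000-0000-0000-000000000000",
    client_id="11111111-1111-1111-1111-111111111111",
)
print(settings.account_fqdn)
print(settings.token_endpoint)
```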

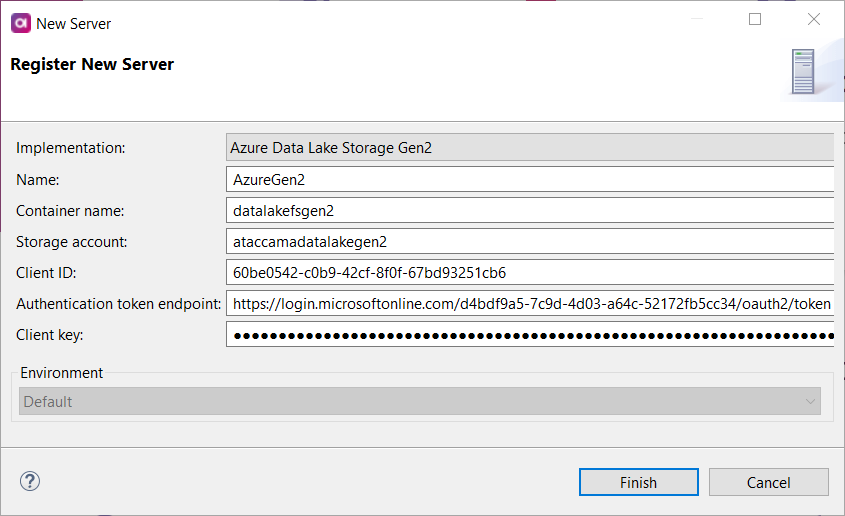

Azure Data Lake Storage Gen2

Similar to Azure Data Lake Storage Gen1, this implementation allows you to connect to the Azure Data Lake Storage Gen2 server.

Property Details:

- Container name: Name of the file system.

- Storage account: Azure Data Lake Storage Gen2 account.

- Client ID: Application (client) ID unique to the client application.

- Authentication token endpoint: URL for obtaining the access token.

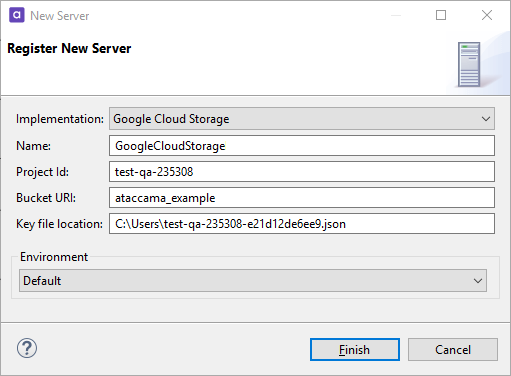

Google Cloud Storage

Connect to the Google Cloud Storage server for uploading or downloading files. Use this connection in workflows and plans.

Property Details:

- Project Id: The ID of your project in Google Cloud Platform.

- Bucket URI: The URI of the bucket in your Google Cloud Platform project.

- Key file location: UNC path to the location where your Key file (.json or .p12) is stored.
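
Before entering these values, it can help to confirm that the key file matches the project you intend to use. The sketch below is one possible pre-check, not something ONE Desktop provides: check_key_file is a hypothetical helper, and it relies only on the type and project_id fields that Google includes in JSON service account keys. A .p12 key is binary, so the sketch only confirms that the file exists.

```python
import json
from pathlib import Path
from typing import List


def check_key_file(key_path: str, expected_project_id: str) -> List[str]:
    """Return a list of problems found with the key file (empty if none)."""
    path = Path(key_path)
    suffix = path.suffix.lower()
    if suffix not in {".json", ".p12"}:
        return [f"unsupported key file extension: {suffix or '(none)'}"]
    if not path.is_file():
        return [f"key file not found: {path}"]
    if suffix == ".p12":
        return []  # binary format, nothing further to inspect here

    try:
        data = json.loads(path.read_text(encoding="utf-8"))
    except json.JSONDecodeError as exc:
        return [f"key file is not valid JSON: {exc}"]
    if not isinstance(data, dict):
        return ["key file does not contain a JSON object"]

    problems: List[str] = []
    if data.get("type") != "service_account":
        problems.append("key file is not a service account key")
    if data.get("project_id") != expected_project_id:
        problems.append(
            f"project_id {data.get('project_id')!r} does not match {expected_project_id!r}"
        )
    return problems
```

Calling check_key_file with the key file path and the value you plan to enter as Project Id returns an empty list when the two agree.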

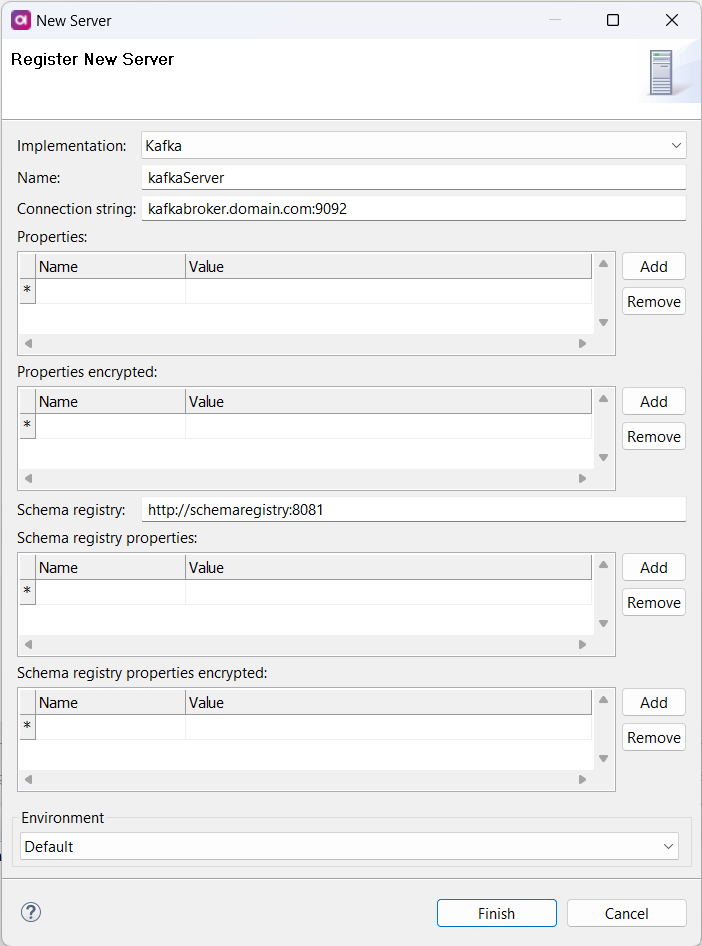

Kafka

Connect to servers running Kafka to consume or publish Kafka messages via Kafka Reader/Writer steps and Kafka online service.

Many more implementations are available, such as Amazon S3 and Salesforce. Explore the server connection implementations that suit your needs, and find out how to configure each of them on our documentation page.

Remember that drag-and-drop functionality is supported for files and objects on Amazon S3, Azure Data Lake Storage Gen1, and Google Cloud Storage servers.

If you have any further questions about specific implementations, feel free to ask in the comments below 👇🏻