Hi everyone!

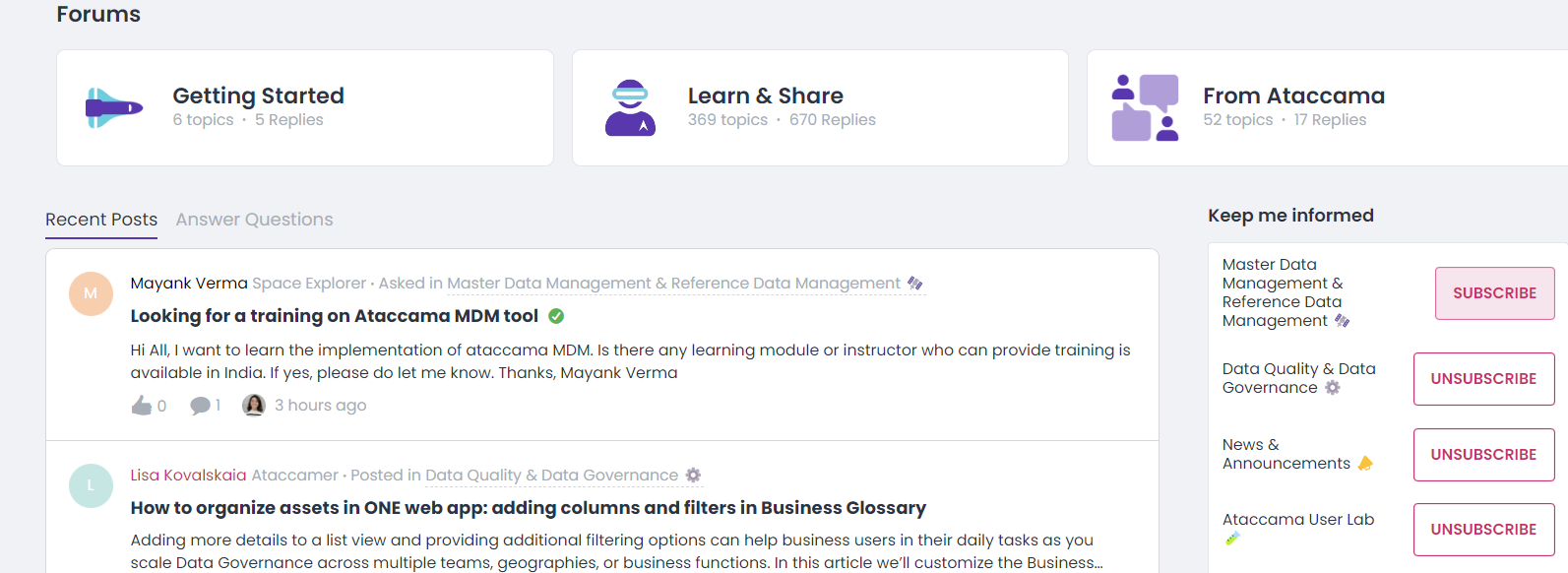

A new week means new best practices! This week we'll focus on Anomaly Detection for our new Data Dictionary, so if you haven't started following our forums yet, click Subscribe now so you don't miss any news.

The easiest way to follow us is to select all the forums you're interested in on our homepage and hit Subscribe.

Now let’s dive in!

Anomaly detection is a pivotal tool for ensuring data accuracy and quality by identifying irregularities and inconsistencies within datasets.

Why Anomaly Detection Matters

Anomaly detection is your safeguard against data discrepancies and plays a crucial role in maintaining data quality and relevance. With this service, you gain the following advantages:

- Swift Issue Resolution: Users are promptly notified of potential data discrepancies or pipeline variations, enabling timely problem resolution.

- Enhanced Data Quality Monitoring: Monitor your dataset's quality to ensure its alignment with actual data conditions. This, in turn, improves the precision of generated reports and analytics for specific timeframes.

Anomaly detection operates on historical profiling versions of specified entities (catalog items and attributes) using either the Isolation Forest algorithm or time series analysis. The Anomaly Detector microservice handles anomaly detection requests from the Metadata Management Module (MMM) and returns the results upon completion.

For more information on configuring the Anomaly Detection feature, visit our guide.

Understanding Anomaly Detection

Anomaly detection fosters accurate and reliable data, evolving alongside your business processes. However, detecting anomalies is context-dependent and requires reference points within the same dataset. As data changes, anomalies shift accordingly. For instance, consider the sequence {1, 1, 2, 1, 1, 1}. Here, 2 is an anomaly. But when more values are added, such as {1, 1, 2, 1, 2, 2, 2, 1, 2, 1}, 2 ceases to be anomalous. A previously normal value can become an anomaly due to data changes.
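To make this context dependence concrete, here is a toy Python check that flags values whose share of the dataset falls below a threshold. The function `rare_values` and the 25% threshold are hypothetical illustrations, not how the product scores anomalies; the point is only that the same value flips from anomalous to normal as more data arrives.

```python
from collections import Counter

def rare_values(values, threshold=0.25):
    """Return the set of values whose relative frequency is below `threshold`.

    A deliberately simple rarity check (not the product's algorithm), used only
    to show that "anomalous" depends on the rest of the dataset.
    """
    counts = Counter(values)
    total = len(values)
    return {v for v, c in counts.items() if c / total < threshold}

short_history = [1, 1, 2, 1, 1, 1]
longer_history = [1, 1, 2, 1, 2, 2, 2, 1, 2, 1]

print(rare_values(short_history))   # {2}
print(rare_values(longer_history))  # set()
```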

Anomaly detection serves two purposes:

- Post-Profiling Anomaly Detection: This process runs automatically after each profiling run and captures any anomalies.

- Data Quality Evaluation in Monitoring Projects: Anomaly detection contributes to data quality assessment in monitoring projects.

Two models drive the feature:

- Time-independent model, using the Isolation Forest algorithm: Default and versatile.

- Time-dependent model, employing time series analysis: Limited to monitoring projects with time-dependent anomaly configuration and ample data points.

Time-independent Model (Isolation Forest Algorithm)

This model uses the Isolation Forest algorithm, which identifies anomalies by how easily a data point can be separated from the rest of the dataset: points that deviate strongly from the others are isolated with fewer random splits. The algorithm evaluates multiple statistical categories for each data point and analyzes them together, so it can detect anomalies across several dimensions at once.
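For readers curious about the mechanics, below is a minimal from-scratch sketch of Isolation Forest scoring in plain Python. It assumes each profiling run has already been turned into a numeric feature vector of statistics; the function names, the number of trees, and the sample size are illustrative choices, not the Anomaly Detector's actual implementation or settings. Each tree repeatedly picks a random feature and a random split value, and rows that end up isolated after fewer splits get scores closer to 1.

```python
import math
import random

EULER_GAMMA = 0.5772156649

def _avg_path(n):
    """c(n): average path length of an unsuccessful binary-search-tree lookup among n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + EULER_GAMMA  # approximation of H(n - 1)
    return 2.0 * harmonic - 2.0 * (n - 1) / n

def _build_tree(points, depth, max_depth, rng):
    """Recursively split on a random feature at a random threshold."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    feature = rng.randrange(len(points[0]))
    lo = min(p[feature] for p in points)
    hi = max(p[feature] for p in points)
    if lo == hi:  # simplification: stop if the chosen feature is constant here
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    return {
        "feature": feature,
        "split": split,
        "left": _build_tree([p for p in points if p[feature] < split], depth + 1, max_depth, rng),
        "right": _build_tree([p for p in points if p[feature] >= split], depth + 1, max_depth, rng),
    }

def _path_length(point, node):
    """Number of splits needed to isolate `point`, plus c(size) for unfinished leaves."""
    depth = 0
    while "split" in node:
        node = node["left"] if point[node["feature"]] < node["split"] else node["right"]
        depth += 1
    return depth + _avg_path(node["size"])

def isolation_scores(rows, n_trees=100, sample_size=256, seed=0):
    """Anomaly score per row in (0, 1]; values near 1 are more anomalous."""
    if len(rows) < 6:  # assumption: mirrors the 6-run minimum quoted in this post
        raise ValueError("need at least 6 profiling runs")
    rng = random.Random(seed)
    psi = min(sample_size, len(rows))
    max_depth = math.ceil(math.log2(psi))
    trees = [_build_tree(rng.sample(rows, psi), 0, max_depth, rng) for _ in range(n_trees)]
    norm = _avg_path(psi)
    return [2 ** (-sum(_path_length(r, t) for t in trees) / n_trees / norm) for r in rows]

# Hypothetical history: each row is one profiling run, [row_count_thousands, null_percentage]
runs = [[10.0, 1.0], [10.2, 1.1], [9.9, 0.9], [10.1, 1.0],
        [10.0, 1.2], [9.8, 1.0], [10.3, 0.8], [10.1, 25.0]]
scores = isolation_scores(runs)
print(max(range(len(runs)), key=scores.__getitem__))  # index of the most anomalous run
```

In this made-up history the last run's null percentage jumps to 25%, so it is isolated in very few splits and should receive the highest score. In practice you would more likely reach for a library implementation such as scikit-learn's `IsolationForest`.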

The model further pinpoints the single most anomalous feature, such as the minimum feature in the numeric statistics category. This insight helps users understand the root cause of anomalies and take corrective actions.
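The post doesn't describe how the most anomalous feature is chosen, so the sketch below swaps in a simple, commonly used heuristic: a robust z-score (distance from the historical median, measured in median absolute deviations) for each statistic of the flagged run, returning the largest. The function `most_anomalous_feature` and the statistic names `min`, `max`, and `mean` are hypothetical.

```python
from statistics import median

def most_anomalous_feature(history, latest):
    """Return (name, score) for the statistic of `latest` that deviates most from `history`.

    history: list of dicts mapping statistic name -> value (earlier profiling runs)
    latest:  dict with the same keys (the run flagged as anomalous)
    Score = |value - median| / MAD, a robust z-score.
    """
    best_name, best_score = None, -1.0
    for name, value in latest.items():
        past = [run[name] for run in history]
        med = median(past)
        mad = median(abs(x - med) for x in past) or 1e-9  # avoid division by zero
        score = abs(value - med) / mad
        if score > best_score:
            best_name, best_score = name, score
    return best_name, best_score

history = [
    {"min": 0, "max": 100, "mean": 50.0},
    {"min": 1, "max": 98, "mean": 49.5},
    {"min": 0, "max": 101, "mean": 50.2},
    {"min": 2, "max": 99, "mean": 50.1},
    {"min": 1, "max": 100, "mean": 49.8},
    {"min": 0, "max": 102, "mean": 50.0},
]
latest = {"min": -500, "max": 101, "mean": 45.0}
print(most_anomalous_feature(history, latest))
```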

Time-independent anomaly detection requires at least 6 profiling runs and becomes more reliable as more data points accumulate.

Time-dependent Model (Time Series Analysis)

This model relies on periodicity, meaning patterns that repeat at regular intervals. It treats the data as three components: trend, season, and residuals. The trend is the general direction of the data over time, the season captures the repeating patterns, and the residuals are the unpredictable noise that remains.
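A minimal sketch of the trend/season/residual idea, using classical additive decomposition (value = trend + season + residual) with a centered moving average as the trend. This is a stand-in: the post does not name the decomposition method the product uses, and the weekly period, the 3-sigma threshold, and the function names are assumptions for illustration.

```python
from statistics import mean, pstdev

def decompose(values, period):
    """Classical additive decomposition: value = trend + season + residual.

    Assumes an odd `period` so a centered moving average is well defined.
    Points too close to either end to have a trend estimate get None.
    """
    if period % 2 == 0 or len(values) < 2 * period:
        raise ValueError("need an odd period and at least two full cycles")
    half = period // 2
    n = len(values)
    trend = [None] * n
    for i in range(half, n - half):
        trend[i] = mean(values[i - half:i + half + 1])
    # Average the detrended values at each position in the cycle.
    by_phase = [[] for _ in range(period)]
    for i, t in enumerate(trend):
        if t is not None:
            by_phase[i % period].append(values[i] - t)
    raw = [mean(d) for d in by_phase]
    offset = mean(raw)
    season = [s - offset for s in raw]  # seasonal effects sum to zero
    residual = [None if trend[i] is None else values[i] - trend[i] - season[i % period]
                for i in range(n)]
    return trend, season, residual

def flag_anomalies(values, period, k=3.0):
    """Indices whose residual is more than k standard deviations from zero."""
    _, _, residual = decompose(values, period)
    known = [r for r in residual if r is not None]
    sigma = pstdev(known) or 1e-9
    return [i for i, r in enumerate(residual) if r is not None and abs(r) > k * sigma]

week = [100, 102, 101, 99, 120, 130, 90]  # hypothetical daily row counts with a weekly pattern
series = week * 4
series[17] += 60                          # inject a one-off spike
print(flag_anomalies(series, period=7))
```

Because the weekly pattern is removed as "season", the large but regular weekend values are not flagged; only the residual left by the injected spike stands out.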

Effective time-dependent anomaly detection requires at least 6 profiles, and the data must meet the periodicity conditions. The model also accommodates irregular timestamps between profiling runs, which helps keep results accurate.
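The post doesn't say how irregular timestamps are handled. One common approach, shown here purely as an assumption, is to linearly interpolate the irregular samples onto a regular grid before decomposing the series. `resample_linear` is a hypothetical helper, and timestamps are plain numbers (for example, hours since the first profiling run).

```python
from bisect import bisect_right

def resample_linear(timestamps, values, step):
    """Linearly interpolate irregular (timestamp, value) samples onto a regular grid.

    `timestamps` must be sorted and strictly increasing. Returns (grid, interpolated).
    """
    if len(timestamps) != len(values) or len(timestamps) < 2:
        raise ValueError("need at least two samples with matching lengths")
    grid, out = [], []
    t = timestamps[0]
    while t <= timestamps[-1]:
        j = bisect_right(timestamps, t)  # first index with timestamp > t
        if j == len(timestamps):
            out.append(values[-1])  # t coincides with the last timestamp
        else:
            t0, t1 = timestamps[j - 1], timestamps[j]
            v0, v1 = values[j - 1], values[j]
            out.append(v0 + (v1 - v0) * (t - t0) / (t1 - t0))
        grid.append(t)
        t += step
    return grid, out

# Hypothetical profiling runs at hours 0, 24, 50, 72 resampled to a daily grid
grid, daily = resample_linear([0, 24, 50, 72], [100, 110, 136, 158], step=24)
print(grid, daily)
```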

Processing User Feedback

Users can confirm or reject each detected anomaly, and this feedback improves the model over time. The time-independent model leaves confirmed anomalies out of the data it learns from, while the time-dependent model replaces them with expected values, which refines its sensitivity.
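The two feedback strategies can be sketched as follows. This is a hypothetical helper operating on a single metric's history, not the product's API: for the time-independent model, confirmed anomalies are dropped; for the time-dependent model, they are replaced with the model's expected value. Replacing rather than dropping presumably keeps the series evenly spaced, which periodicity-based analysis depends on.

```python
def apply_feedback(history, confirmed, expected, time_dependent):
    """Return a cleaned copy of a metric history after user feedback.

    history:   observed values, one per profiling run
    confirmed: set of indices the user confirmed as real anomalies
    expected:  model-predicted values, same length as history
    """
    if time_dependent:
        # Keep every position; swap confirmed anomalies for the expected value.
        return [expected[i] if i in confirmed else v for i, v in enumerate(history)]
    # Time-independent: simply leave confirmed anomalies out.
    return [v for i, v in enumerate(history) if i not in confirmed]

history = [10, 11, 50, 10, 12]
expected = [10, 11, 11, 10, 12]
print(apply_feedback(history, {2}, expected, time_dependent=False))  # [10, 11, 10, 12]
print(apply_feedback(history, {2}, expected, time_dependent=True))   # [10, 11, 11, 10, 12]
```

In this sketch, rejected anomalies simply remain in the history as normal values.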

Navigating Edge Cases

Anomaly detection adapts to edge cases:

- Empty profiles: Anomaly detection incorporates both empty and non-empty profiles.

- Profiles with mixed data types: Anomalies are identified based on profiles' data types.

- Inconsistent statistics: Anomalies are detected based on the statistical categories the profiles have in common.
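For the inconsistent-statistics case, a minimal sketch of "detect on common categories" is to keep only the statistics present in every profile and build fixed-order feature rows from them. The function name and statistic names are hypothetical; the product's exact matching rules are not described in the post.

```python
def common_statistics(profiles):
    """Align profiles on the statistics that every profile contains.

    profiles: list of dicts mapping statistic name -> value, one per profiling run.
    Returns (names, rows): the shared names in a fixed order and one row per profile.
    """
    if not profiles:
        return [], []
    shared = set(profiles[0])
    for profile in profiles[1:]:
        shared &= set(profile)  # drop anything a single profile is missing
    names = sorted(shared)
    return names, [[profile[name] for name in names] for profile in profiles]

profiles = [
    {"min": 1, "max": 9, "null_count": 0},
    {"min": 2, "max": 8},
    {"min": 1, "max": 10, "distinct_count": 5},
]
names, rows = common_statistics(profiles)
print(names, rows)  # ['max', 'min'] [[9, 1], [8, 2], [10, 1]]
```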

Enhancing Sensitivity and Reducing False Positives

Custom rules complement the models, making anomaly detection more accurate and reducing false positives. The models are tuned to detect nulls, unexpected values, and trends.

Sizing Guidelines

Anomaly Detection's resource consumption scales with the volume of data it processes: more data requires more resources. The Anomaly Detector microservice needs at least 140 MB of memory (RAM) and can use multiple threads for better performance. Be careful when changing the parallelism properties, and seek expert guidance before doing so.

Because the microservice's memory usage grows with the size of the profiling history, managing the number of stored profile versions keeps it efficient. Our ongoing performance tests confirm that with fewer profiling versions (under 100), the microservice's memory usage remains reasonable.

Explore Anomaly Detection's potential with the assurance of optimized performance and enhanced data integrity.

Do you have any best practices or thoughts? Share them in the comments!