Hi everyone,

Welcome back to our best practice series on MDM. In this post, we're diving deep into the features that make MDM what it is, so buckle up.

MDM’s primary feature is its model-driven architecture. It's a domain-agnostic MDM that empowers developers to craft domain-specific MDM models. Let's break down its high-level features by type.

Model-Driven Architecture

MDM's domain-agnostic approach is complemented by vertical models (metadata templates) designed to be domain-specific. These models are not cast in stone; they are customizable and extendable to meet the unique needs of your project. To delve deeper into this model-driven marvel, check out the Model.

Internal Workflow and Processing Perfection

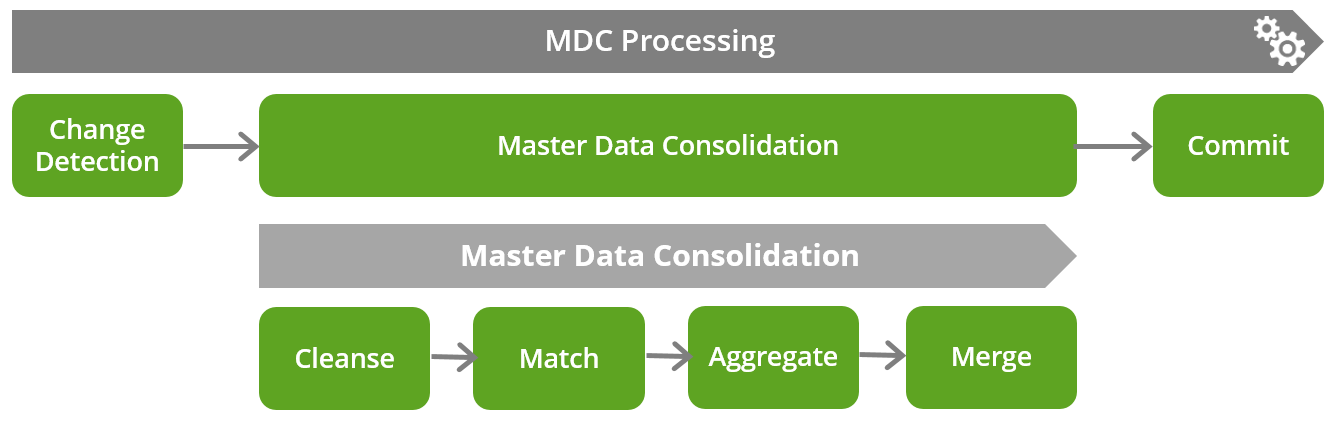

MDM boasts a remarkable set of features:

- Out-of-the-box change detection on load: MDM is a keen observer. It can detect changes in source instance records by tracking their unique source IDs. If a record has changed, it triggers the MDM process for that specific record. The granularity of delta detection goes down to the attribute level, providing developers the freedom to decide which attributes should trigger MDM processing.

- Entity-by-entity-oriented processing: MDM processes entities independently, in parallel. Should there be dependencies between entities, MDM creates them as needed based on the MDM execution plan.

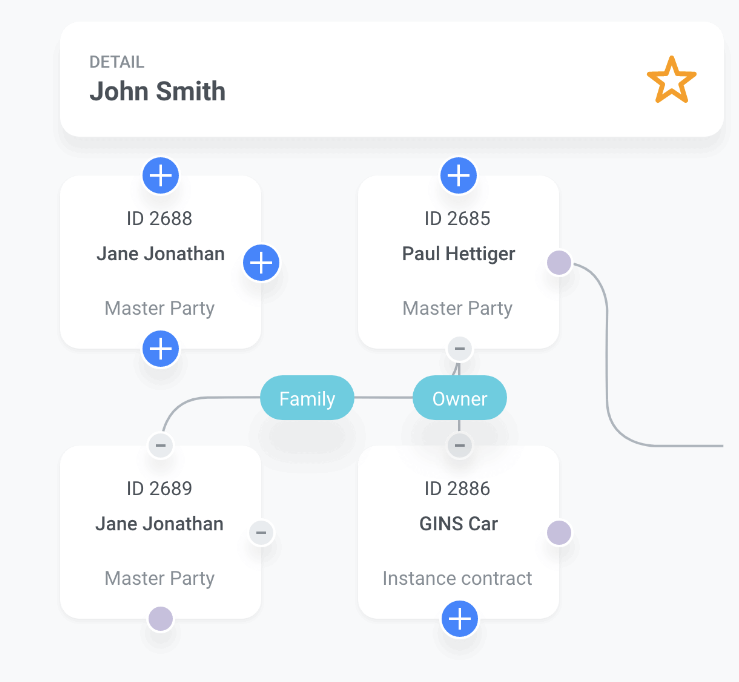

- Data transfer and linkage between entities: Entities can share their columns with other entities through their relationships. This enables the transfer of valuable data to support MDM processes. For example, you can copy values from a supporting entity to its parent entity for matching.

- Consistent MDM processes: MDM maintains uniformity in data cleansing, matching, and merging plans across all processing modes (batch, online, hybrid) and source systems. This is achieved through a common canonical model accommodating all heterogeneous representations of the same entities.
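To make attribute-level change detection more concrete, here is a minimal Python sketch. It is not MDM's actual API: the `ChangeDetector` class, its `tracked_attributes` parameter, and the sample records are all hypothetical. It only illustrates the idea of keying records by source ID and triggering processing when a tracked attribute changes.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeDetector:
    """Hypothetical delta detector; names are illustrative, not MDM's API."""

    # Attributes whose changes should trigger processing.
    tracked_attributes: set
    # Last seen values of the tracked attributes, keyed by source ID.
    _seen: dict = field(default_factory=dict)

    def needs_processing(self, source_id, record):
        """Return True if the record is new or a tracked attribute changed."""
        snapshot = {name: record.get(name) for name in self.tracked_attributes}
        changed = self._seen.get(source_id) != snapshot
        self._seen[source_id] = snapshot
        return changed


detector = ChangeDetector(tracked_attributes={"name", "email"})

# First load of a source ID always counts as a change.
first_load = detector.needs_processing(
    "crm-1", {"name": "Ann", "email": "a@x.org", "note": "v1"})
# Only the untracked "note" attribute changed, so nothing is triggered.
untracked_change = detector.needs_processing(
    "crm-1", {"name": "Ann", "email": "a@x.org", "note": "v2"})
# A tracked attribute changed, so the record is processed again.
tracked_change = detector.needs_processing(
    "crm-1", {"name": "Ann", "email": "ann@x.org", "note": "v2"})

print(first_load, untracked_change, tracked_change)
```

Keeping only the tracked attributes in the snapshot is what lets a change to an untracked attribute pass without triggering any processing.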

Integrated Internal Workflow with Logical Transaction

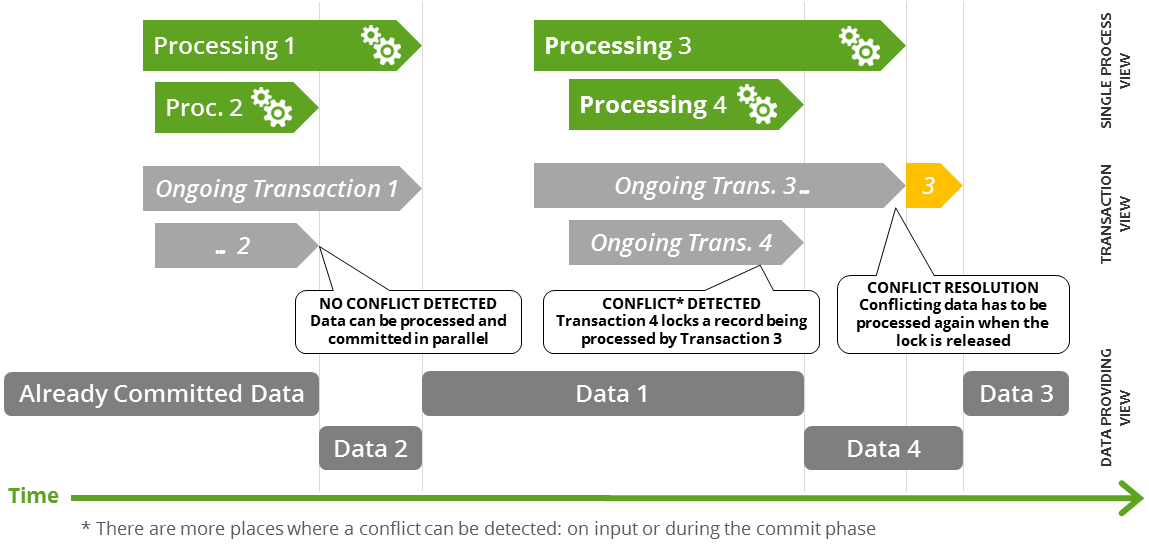

MDM's integrated internal workflow automates and orchestrates MDM processes seamlessly. It allows for:

- Coexistence of batch, stream, and online processes: MDM supports a hybrid deployment and interacts with both SOA-ready and legacy systems through batch interfaces.

- Parallel batch and online processing: Ideal for online parallel processing, this feature also enables parallel batch operations.

- Consistent data provision: Read-only services act as data providers and access the actual data, while read-write services operate within the data scope of the Logical Transaction. The Logical Transaction ensures data consistency and rollback capability.

Serial Logical Transaction

In this scenario, MDM processes are serialized. The transaction either succeeds or is rolled back, and the data provided to consumers depends on that outcome.
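The commit-or-rollback behavior can be pictured with a small Python sketch. This is an illustration under stated assumptions, not how MDM implements it: the `LogicalTransaction` class and the plain dictionary standing in for the data store are hypothetical. Writes stay in a private working copy, so readers of the committed data are unaffected until the transaction commits.

```python
import copy


class LogicalTransaction:
    """Hypothetical sketch: writes go to a private working copy until commit."""

    def __init__(self, store):
        self._store = store                    # committed data, seen by readers
        self._working = copy.deepcopy(store)   # data scope of the transaction

    def write(self, key, value):
        self._working[key] = value

    def read(self, key):
        return self._working.get(key)

    def commit(self):
        # Make the transaction's changes visible to everyone.
        self._store.clear()
        self._store.update(self._working)

    def rollback(self):
        # Discard the changes and return to the committed state.
        self._working = copy.deepcopy(self._store)


committed = {"customer-1": "Ann"}
tx = LogicalTransaction(committed)

tx.write("customer-1", "Ann Smith")
inside_tx = tx.read("customer-1")       # the transaction sees its own write
outside_tx = committed["customer-1"]    # readers still see the old value

tx.rollback()
after_rollback = tx.read("customer-1")  # the write is gone

tx.write("customer-1", "Ann Smith")
tx.commit()
after_commit = committed["customer-1"]  # now visible to readers
```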

Parallel Logical Transaction

Parallel processing is the name of the game here. It's perfect for online and/or streaming data processing. MDM can detect and resolve conflicts when needed, making transactions fully parallel when conflicts are absent.
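One common way to detect conflicts between parallel transactions is optimistic version checking, sketched below in Python. Whether MDM uses this technique internally is not stated here, so treat it purely as an illustration; `VersionedStore` and `try_commit` are hypothetical names. A transaction commits only if the records it read have not changed in the meantime, so transactions touching different records never block each other.

```python
import threading


class VersionedStore:
    """Hypothetical store that keeps a version number next to each value."""

    def __init__(self):
        self._data = {}                  # key -> (version, value)
        self._lock = threading.Lock()    # protects the check-then-write step

    def read(self, key):
        return self._data.get(key, (0, None))

    def try_commit(self, read_versions, writes):
        """Apply writes only if nothing the transaction read has changed."""
        with self._lock:
            for key, version in read_versions.items():
                if self.read(key)[0] != version:
                    return False         # conflict detected
            for key, value in writes.items():
                self._data[key] = (self.read(key)[0] + 1, value)
            return True


store = VersionedStore()
store.try_commit({}, {"a": 1, "b": 2})   # initial load: both keys at version 1

# Three transactions start from the same state.
version_a = store.read("a")[0]
version_b = store.read("b")[0]

tx1_ok = store.try_commit({"a": version_a}, {"a": 10})  # touches only "a"
tx2_ok = store.try_commit({"b": version_b}, {"b": 20})  # touches only "b"
tx3_ok = store.try_commit({"a": version_a}, {"a": 30})  # stale read of "a"

# One possible resolution (an assumption): re-read and retry.
retry_ok = store.try_commit({"a": store.read("a")[0]}, {"a": 30})
```

Here the first two transactions commit independently, the third is rejected because it read a stale version of `"a"`, and the retry succeeds.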

Business Lineage and Versioning

MDM keeps historical records by supporting logical and physical deletes through housekeeping activities. You get metadata engine flags to mark different statuses of records and activate or deactivate selected records based on source system updates. Historical record updates can also be stored if activated.

What MDM provides out-of-the-box:

- Maintenance of inactive records for business lineage via logical deletes, with different system-level handling for active and inactive records.

- Change capture and publication, creating versioning history. Intra-day changes and snapshots can be published.

- Versioning records of selected entities and layers. History data can be provided by native service interfaces and batch exports.
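The difference between logical deletes, physical deletes, and versioning can be sketched in a few lines of Python. Everything here is hypothetical (the `MasterRecord` class, the `active` flag, the `housekeeping` function); it only mirrors the concepts above and is not MDM's data model.

```python
from dataclasses import dataclass, field


@dataclass
class MasterRecord:
    """Hypothetical record with an activity flag and a version history."""

    values: dict
    active: bool = True
    history: list = field(default_factory=list)

    def update(self, new_values):
        # Keep the previous state so earlier versions remain available.
        self.history.append(dict(self.values))
        self.values = dict(new_values)

    def logical_delete(self):
        # The record stays in storage; only its status flag changes.
        self.active = False


def housekeeping(records):
    """Physical delete: drop records that were logically deleted earlier."""
    return {key: rec for key, rec in records.items() if rec.active}


records = {
    "c1": MasterRecord({"name": "Ann"}),
    "c2": MasterRecord({"name": "Bob"}),
}
records["c1"].update({"name": "Ann Smith"})
records["c2"].logical_delete()

still_stored = sorted(records)                 # the inactive record is kept
after_purge = sorted(housekeeping(records))    # housekeeping removes it
```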

Stream Interface

MDM's Stream Interface is your ticket to reading messages from a queue using JMS and converting them into MDM entries. Processing starts as soon as either the defined maximum number of messages has been collected or the maximum waiting time has been reached, whichever comes first. It's all about flexibility and efficiency.
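The "maximum count or maximum wait" rule is a classic batching pattern. The Python sketch below uses the standard library's `queue.Queue` as a stand-in for a JMS queue; the `collect_batch` function and its parameters are hypothetical, and starting the timer when collection begins (rather than at the first message) is an assumption.

```python
import queue
import time


def collect_batch(source, max_messages, max_wait_seconds):
    """Collect messages until the batch is full or the wait time runs out."""
    batch = []
    deadline = time.monotonic() + max_wait_seconds
    while len(batch) < max_messages:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break                      # maximum waiting time reached
        try:
            batch.append(source.get(timeout=remaining))
        except queue.Empty:
            break                      # nothing more arrived in time
    return batch


messages = queue.Queue()               # stand-in for a JMS queue
for i in range(5):
    messages.put(f"m{i}")

# The first batch is cut off by the message limit.
first_batch = collect_batch(messages, max_messages=3, max_wait_seconds=0.2)
# The second batch is cut off by the waiting time.
second_batch = collect_batch(messages, max_messages=3, max_wait_seconds=0.2)
```

A small message limit keeps latency low under heavy traffic, while the waiting time guarantees that a trickle of messages still gets processed.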

Event Handler and Event Publisher

The Event Handler and Event Publisher are your go-to tools for capturing user-defined changes during data processing. Event handlers can function asynchronously and support filtering for specific events. The captured events can then be published through publishers of various types, from standard output to custom channel publishers.

As a side note, the EventHandler class persists events, so it requires available persistent storage.
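The filter-then-publish flow can be sketched in Python as follows. This `EventHandler` is a hypothetical, simplified stand-in and not MDM's EventHandler class: it runs synchronously and does not persist anything. The event shape and the `"MERGE"` event type are likewise made up for illustration.

```python
class EventHandler:
    """Hypothetical handler: filters events and forwards them to publishers."""

    def __init__(self, event_filter, publishers):
        self.event_filter = event_filter   # decides which events are captured
        self.publishers = publishers       # callables that receive each event

    def handle(self, event):
        if not self.event_filter(event):
            return False                   # event filtered out
        for publish in self.publishers:
            publish(event)
        return True


collected = []                             # stands in for a custom channel
handler = EventHandler(
    event_filter=lambda event: event["type"] == "MERGE",
    publishers=[print, collected.append],  # standard output plus custom channel
)

merge_handled = handler.handle({"type": "MERGE", "entity": "party", "id": 1})
update_handled = handler.handle({"type": "UPDATE", "entity": "party", "id": 2})
```

Treating publishers as plain callables makes it easy to mix a standard-output publisher with a custom one, which is the spirit of the publisher types described above.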

High Availability

MDM takes non-functional features seriously, and high availability is one of its top priorities. It supports HA in different modes (Active-Active, Active-Passive) and relies on ZooKeeper. Which services are supported depends on the mode and architecture chosen. Load balancing can be handled by an external tool such as F5 BIG-IP.

While MDM provides high availability, remember that your storage platform also plays a critical role in ensuring uninterrupted service. Implementing high availability on the storage side, like Oracle RAC, is crucial to avoid single points of failure.